MT that Deceives

Many popular MT systems, such as Google Translate or Bing Translator (for certain languages), are based purely on statistical models. Such models observe word and phrase co-occurrences in parallel texts and try to learn translation equivalents.

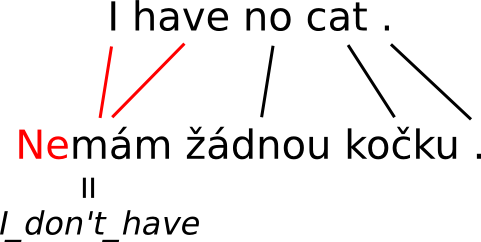

In some cases, this approach leads to systematic errors. The picture illustrates a common issue with negation: in many languages (such as Czech), negation is expressed by a prefix ("ne" in this case). Moreover, Czech uses double negatives: word by word, the sentence Nemám žádnou kočku. corresponds to English I-do-not-have no cat. Because the idiomatic English translation is I have no cat., the single English negation tends to be paired with žádnou, and the automatic procedure learns the wrong translation rule I have = nemám. Whenever this rule is applied, the meaning of the translation is completely reversed.
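How co-occurrence counting can arrive at such a rule may be easier to see on a toy example. The sketch below is only an illustration under stated assumptions: the four-sentence corpus is made up, and the Dice coefficient stands in for whatever association or alignment model a real system uses. The names `dice_table` and `top_partners` are hypothetical helpers, not part of any MT toolkit.

```python
from collections import Counter
from itertools import product

# Hypothetical toy parallel corpus (Czech, idiomatic English), lowercased.
CORPUS = [
    ("nemám žádnou kočku", "i have no cat"),
    ("nemám žádnou knihu", "i have no book"),
    ("nemám žádnou práci", "i have no job"),
    ("nevidí žádnou kočku", "he sees no cat"),
]

def dice_table(corpus):
    """Score every (source word, target word) pair by sentence-level co-occurrence."""
    src_count, tgt_count, pair_count = Counter(), Counter(), Counter()
    for src, tgt in corpus:
        src_words, tgt_words = set(src.split()), set(tgt.split())
        src_count.update(src_words)
        tgt_count.update(tgt_words)
        pair_count.update(product(src_words, tgt_words))
    # Dice coefficient: 2 * joint count / (source count + target count).
    return {
        (s, t): 2 * c / (src_count[s] + tgt_count[t])
        for (s, t), c in pair_count.items()
    }

def top_partners(table, src_word):
    """Return the target words tied for the highest score with src_word, sorted."""
    scores = {t: v for (s, t), v in table.items() if s == src_word}
    best = max(scores.values())
    return sorted(t for t, v in scores.items() if v == best)

table = dice_table(CORPUS)
print(top_partners(table, "nemám"))   # ['have', 'i']
print(top_partners(table, "žádnou"))  # ['no']
```

In this toy corpus the English side never contains "not", so the only negative word, "no", is claimed by žádnou, and nemám is left with "i" and "have" as its strongest partners.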

Other examples of notorious errors include named entities, such as:

Jan Novák potkal Karla Poláka. -> John Smith met Charles Pole. (The name Novák is sometimes translated as Smith because both are very common surnames in their respective languages; the surname Polák is rendered literally as the common noun Pole.)

There is also a disconnect when translating between a morphologically poor and a morphologically rich language. While the former tend to express argument roles using word order (think English), the latter often use inflectional affixes. A statistical system which simply learns correspondences between words and short phrases then fails to capture the difference in meaning:

Pes dává kočce myš. (the dog gives the cat a mouse)

Psovi dává myš kočku. (to the dog, the mouse gives the cat)

Psovi dává kočka myš. (to the dog, the cat gives a mouse)

At the time of writing, Google Translate translates all three of these examples identically, even though their meanings are radically different.
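The role of the case endings can be made concrete with a small sketch. It is not a model of how Google Translate or any real system works; the mini-lexicon, its case labels, and the helper names `word_by_word` and `roles` are all assumptions introduced for illustration. The first function translates each word form in isolation and discards case, while the second reads the argument roles off the case labels.

```python
# Hypothetical mini-lexicon: Czech surface form -> (English word, possible cases).
LEXICON = {
    "pes": ("dog", ("nom",)),
    "psovi": ("dog", ("dat",)),
    "kočka": ("cat", ("nom",)),
    "kočce": ("cat", ("dat",)),
    "kočku": ("cat", ("acc",)),
    "myš": ("mouse", ("nom", "acc")),  # same form in both cases
    "dává": ("gives", ()),             # verb, carries no case
}

SENTENCES = [
    "Pes dává kočce myš.",
    "Psovi dává myš kočku.",
    "Psovi dává kočka myš.",
]

def words(sentence):
    return sentence.lower().rstrip(".").split()

def word_by_word(sentence):
    """Translate each form in isolation, ignoring case; returns a sorted word list."""
    return sorted(LEXICON[w][0] for w in words(sentence))

def roles(sentence):
    """Map each case (nom = giver, dat = recipient, acc = thing given) to a noun."""
    assigned, ambiguous = {}, []
    for w in words(sentence):
        english, cases = LEXICON[w]
        if len(cases) == 1:
            assigned[cases[0]] = english
        elif cases:
            ambiguous.append((english, cases))
    # An ambiguous form takes whichever of its cases is still unfilled.
    for english, cases in ambiguous:
        free = [c for c in cases if c not in assigned]
        assigned[free[0]] = english
    return assigned

for s in SENTENCES:
    print(word_by_word(s), roles(s))
```

Once case is discarded, all three sentences reduce to the same set of English words, so nothing but the Czech word order remains to decide who gives what to whom; the case-aware reading assigns a different giver, recipient, or object to each sentence.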