Phrase-based Model


Revision as of 21:02, 7 April 2015


Phrase-based machine translation (PBMT) is probably the most widely used approach to MT today. It is relatively simple and easy to adapt to new languages.

Phrase Extraction

PBMT uses phrases as the basic unit of translation. Phrases are simply contiguous sequences of words which have been observed in the training data; they do not correspond to any linguistic notion of phrases.

In order to obtain a phrase table (a probabilistic dictionary of phrases), we need word-aligned parallel data. Using the alignment links, a simple heuristic is applied to extract consistent phrase pairs. Consider the word-aligned example sentence:

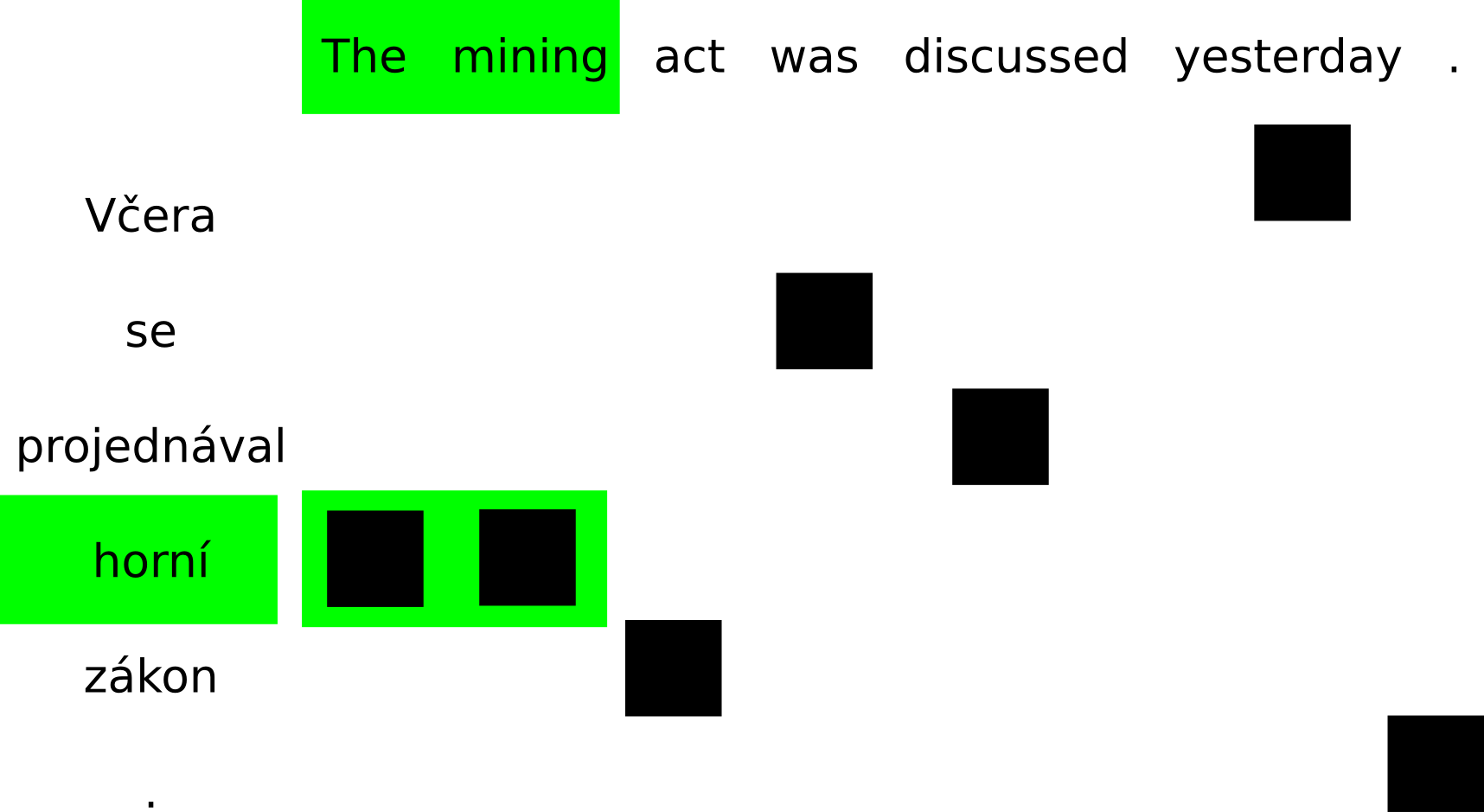

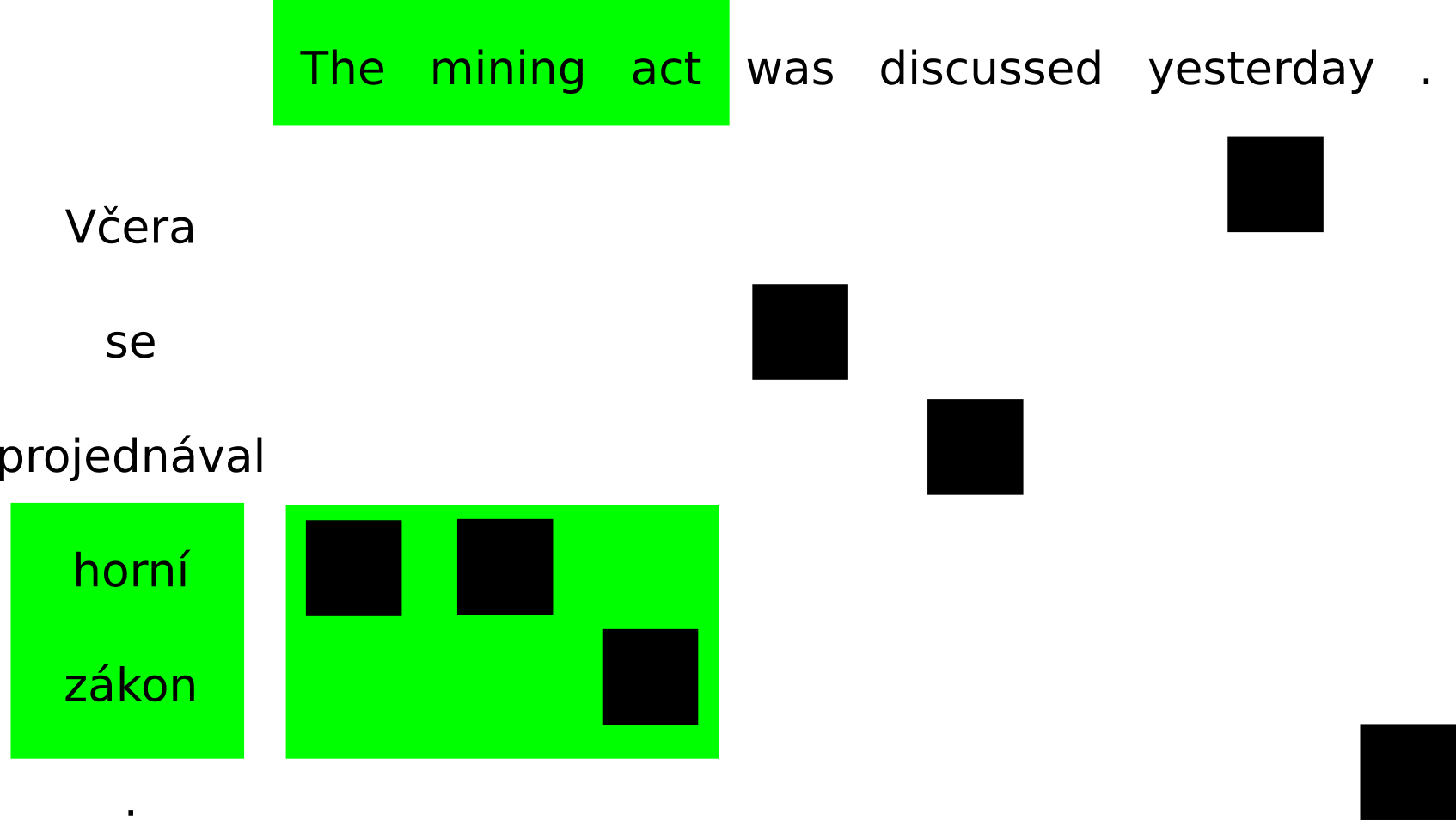

Phrase pairs are contiguous spans where all alignment points from the source side of the span fall within its target side and vice versa. These are examples of phrases consistent with this word alignment:

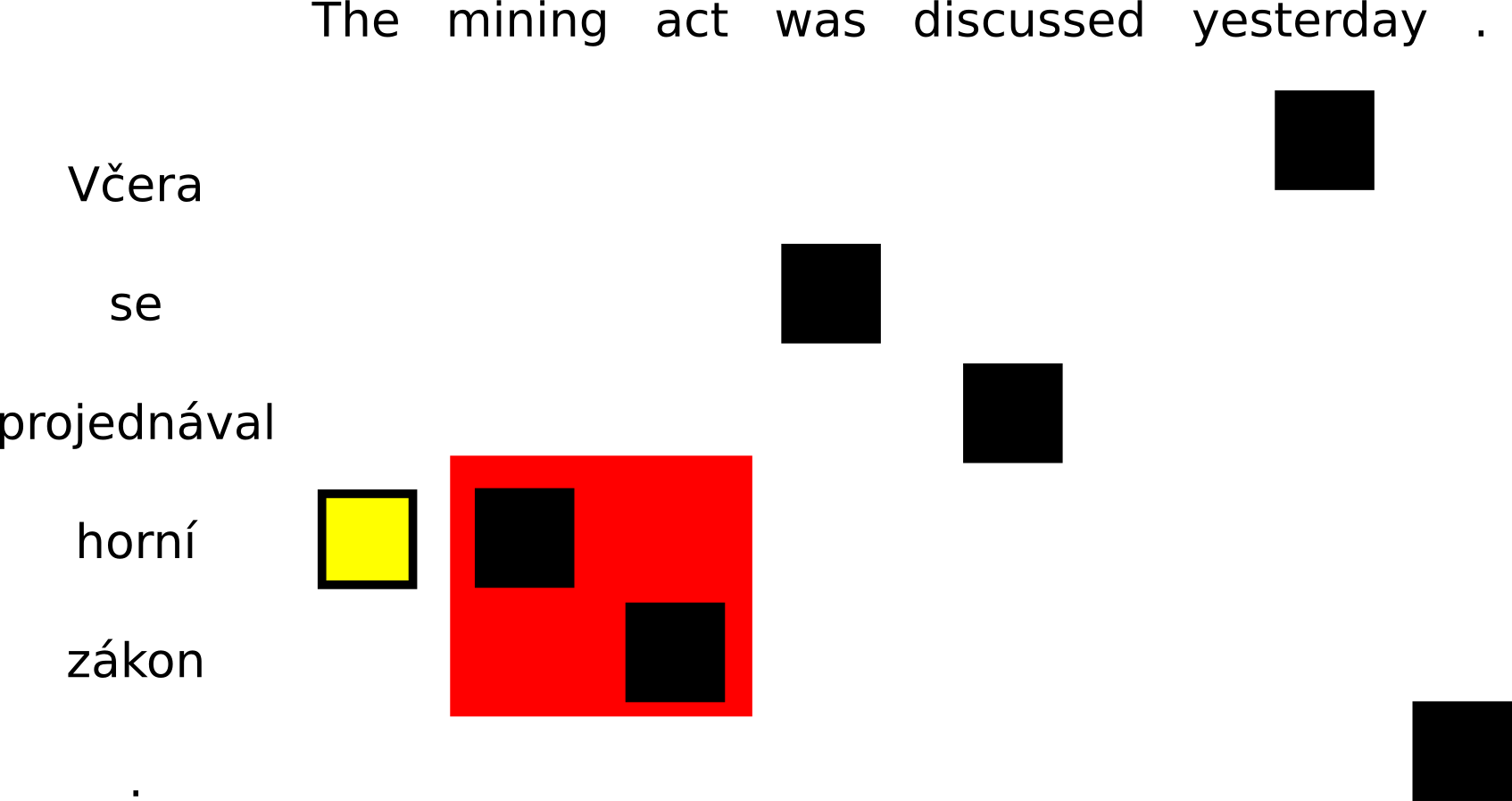

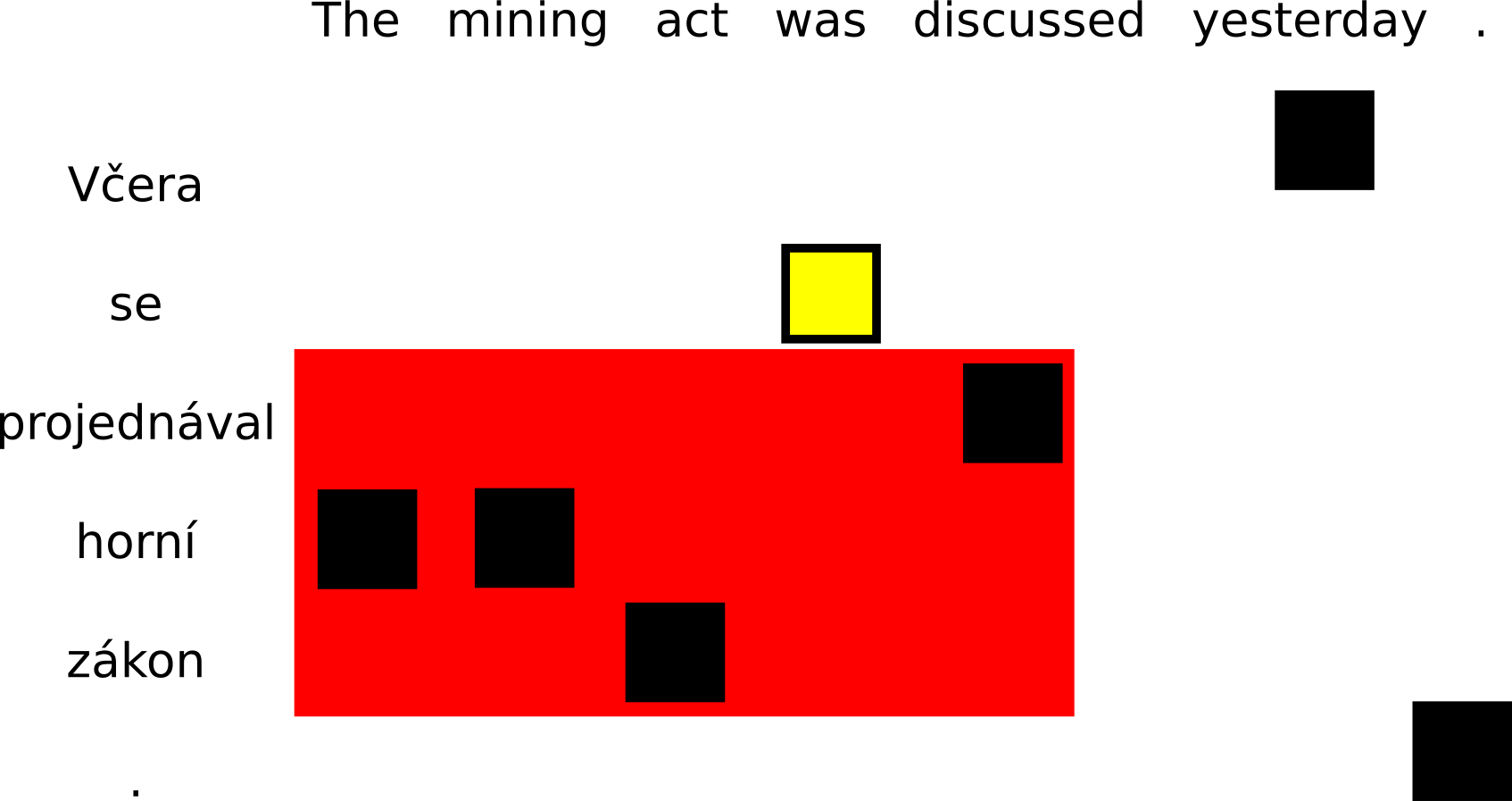

On the other hand, if either a source word or a target word is aligned outside of the current span, the phrase cannot be extracted. The conflicting alignment points are drawn in yellow:

In practice, only phrases up to a certain length are extracted (e.g. 7 words). Longer phrases would hardly ever be used by the translation model (unless it was presented with a sentence from the training data) and the phrase table would be extremely large.
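The consistency check can be sketched in a few lines of Python. This is a simplified illustration, not the exact heuristic used by any particular toolkit: the function name `extract_phrases`, the representation of the alignment as a set of `(source_index, target_index)` links and the example alignment are assumptions made here. For each source span it takes only the smallest target span that contains all linked words, and it does not extend phrases over unaligned words.

```python
def extract_phrases(src, tgt, alignment, max_len=7):
    """Return phrase pairs consistent with the word alignment.

    src, tgt  -- lists of words
    alignment -- set of (source_index, target_index) links
    max_len   -- maximum phrase length on either side
    """
    pairs = set()
    for s_start in range(len(src)):
        for s_end in range(s_start, min(s_start + max_len, len(src))):
            # Target positions linked to any word in the source span.
            linked = [t for (s, t) in alignment if s_start <= s <= s_end]
            if not linked:
                continue  # this sketch skips spans with no alignment points
            t_start, t_end = min(linked), max(linked)
            if t_end - t_start + 1 > max_len:
                continue
            # Consistency: no word inside the target span may be aligned
            # to a source word outside the source span.
            conflict = any(
                t_start <= t <= t_end and not (s_start <= s <= s_end)
                for (s, t) in alignment
            )
            if conflict:
                continue
            pairs.add((" ".join(src[s_start:s_end + 1]),
                       " ".join(tgt[t_start:t_end + 1])))
    return pairs


# Hypothetical word-aligned sentence pair (not the one from the figures).
src = "Honza miluje Marii".split()
tgt = "John is fond of Mary".split()
links = {(0, 0), (1, 1), (1, 2), (1, 3), (2, 4)}
for f, e in sorted(extract_phrases(src, tgt, links)):
    print(f, "|||", e)
```

Because the target span is derived from the links of the source span, only the "vice versa" direction needs an explicit check.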

Phrase Scoring

Once we have extracted all consistent phrase pairs from our training data, we can assign translation probabilities to them using maximum likelihood estimation. To estimate the probability of phrase $\bar{e}$ being the translation of phrase $\bar{f}$, we simply count:

$$P(\bar{e} \mid \bar{f}) = \frac{\mathrm{count}(\bar{f}, \bar{e})}{\mathrm{count}(\bar{f})}$$

The formula tells us to count how many times we saw $\bar{f}$ translated as $\bar{e}$ in our training data and divide that by the number of times we saw $\bar{f}$ in total.

In practice, other scores are also computed (e.g. the probability in the reverse translation direction) but that's a topic for another lecture.
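The counting can be written down directly. The sketch below is a minimal illustration, writing f for the phrase being translated and e for its translation (notation assumed here). The function name `score_phrases` and the list of extracted occurrences are made up for the example.

```python
from collections import Counter


def score_phrases(extracted_pairs):
    """Relative-frequency estimate: P(e | f) = count(f, e) / count(f)."""
    pair_counts = Counter(extracted_pairs)
    src_counts = Counter(f for f, _ in extracted_pairs)
    return {(f, e): c / src_counts[f] for (f, e), c in pair_counts.items()}


# Hypothetical extraction output: one (f, e) entry per extracted occurrence.
occurrences = [
    ("miluje", "loves"), ("miluje", "loves"),
    ("miluje", "likes"), ("miluje", "is fond of"),
    ("Marii", "Mary"),
]
probs = score_phrases(occurrences)
print(probs[("miluje", "loves")])  # 2 of the 4 occurrences of "miluje": 0.5
```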

Decoding

When we get an input sentence for translation, the first step is to look up translation options (possible translations) for each source span in the phrase table. These can be thought of as jigsaw puzzle pieces which are combined to get the best possible final translation. The task of the decoder (the translation program) is to find a combination which covers the whole input sentence and is the most probable according to the model; this procedure is usually called decoding.
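The lookup step might look as follows. This is only a sketch: the phrase table is assumed to be a plain dictionary from source phrases to lists of translations, scores are left out, and the function name `translation_options` and the table entries are hypothetical.

```python
def translation_options(sentence, phrase_table, max_len=7):
    """Map each source span (start, end) to its candidate translations.

    Spans are half-open, i.e. they cover words[start:end].
    """
    words = sentence.split()
    options = {}
    for start in range(len(words)):
        longest = min(start + max_len, len(words))
        for end in range(start + 1, longest + 1):
            phrase = " ".join(words[start:end])
            if phrase in phrase_table:
                options[(start, end)] = phrase_table[phrase]
    return options


# Toy phrase table; the two-word entry is a made-up example of a longer phrase.
toy_table = {
    "Honza": ["John", "Johny"],
    "miluje": ["loves", "likes"],
    "Marii": ["Mary"],
    "miluje Marii": ["loves Mary"],
}
options = translation_options("Honza miluje Marii", toy_table)
for span, translations in sorted(options.items()):
    print(span, translations)
```

Spans that do not occur in the phrase table simply get no options, so they can only be covered through shorter or longer spans that do occur.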

Here we describe the stack-based beam search algorithm which is commonly used for phrase-based decoding, although other algorithms exist.

Overview

The search begins with an empty hypothesis: no part of the input is covered and nothing has been produced. We can start covering the input sentence by translating any span, and for each span, we can choose any of its possible translations. The decoder produces all of these partial hypotheses.

In the next step, we try to expand our partial translations further by covering the remaining parts of the input sentence. We continue expanding our partial hypotheses until we cover the whole source sentence. We choose the most probable translation.

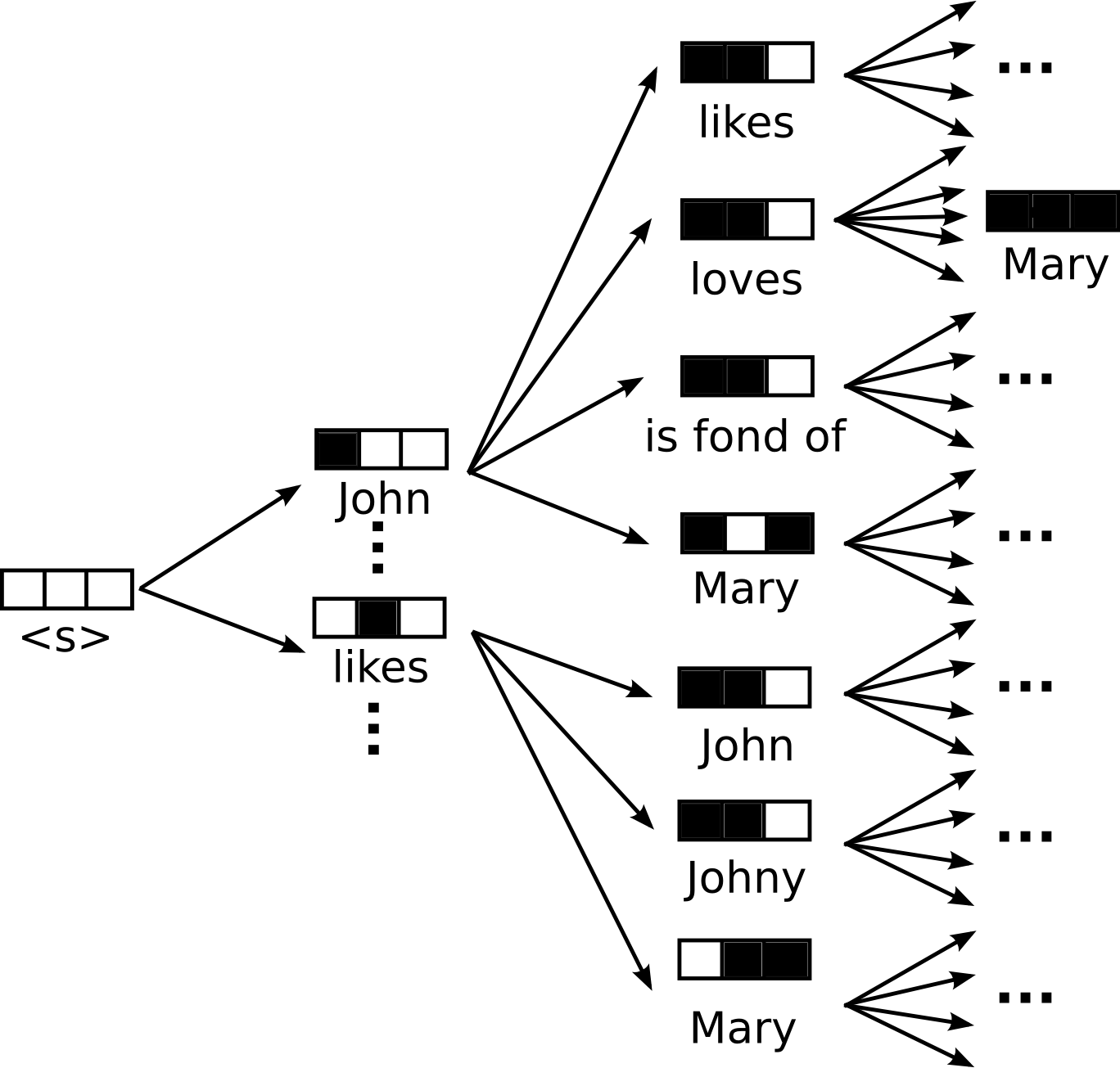

In the following example, we try to translate the Czech sentence:

- Honza miluje Marii

With the following phrase table:

- Honza ||| John
- Honza ||| Johny
- miluje ||| loves
- miluje ||| is fond of
- miluje ||| likes
- Marii ||| Mary

This is an excerpt of the space where the decoder searches for the best translation. One (the most reasonable) full translation is illustrated but the decoder theoretically needs to evaluate all possible combinations.
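For this small example, the complete search space can still be enumerated. The sketch below expands hypotheses exhaustively, starting from the empty one. It is an illustration under simplifying assumptions, not a real decoder: hypotheses are not scored (the phrase table above lists no probabilities), nothing is pruned, and the function name `expand_all` and the representation of a hypothesis as a pair (covered positions, output so far) are choices made here.

```python
PHRASE_TABLE = {
    "Honza": ["John", "Johny"],
    "miluje": ["loves", "is fond of", "likes"],
    "Marii": ["Mary"],
}


def expand_all(words, phrase_table):
    """Enumerate every complete hypothesis by exhaustive expansion."""
    n = len(words)
    # Translation options: (start, end, target) for every span in the table.
    options = [
        (i, j, target)
        for i in range(n)
        for j in range(i + 1, n + 1)
        for target in phrase_table.get(" ".join(words[i:j]), [])
    ]
    complete = []
    # Start from the empty hypothesis: nothing covered, nothing produced.
    agenda = [(frozenset(), ())]
    while agenda:
        covered, output = agenda.pop()
        if len(covered) == n:
            complete.append(" ".join(output))
            continue
        for i, j, target in options:
            span = frozenset(range(i, j))
            if not (span & covered):  # only cover words not yet translated
                agenda.append((covered | span, output + (target,)))
    return complete


hypotheses = expand_all("Honza miluje Marii".split(), PHRASE_TABLE)
print(len(hypotheses))  # 6 orderings x 6 translation choices = 36
print("John loves Mary" in hypotheses)  # True
```

Even with three words and six phrase table entries there are 36 complete hypotheses, because the spans can be translated in any order.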

Stacks, Pruning

With realistic sentence lengths and translation dictionaries, the search space explodes very quickly, and it becomes impossible to go through all possible combinations of span translations and their orderings.
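A back-of-the-envelope count shows how fast this happens. The sketch assumes a deliberately simplified setting: every word has the same number of single-word translation options, longer phrases are ignored and any ordering of the words is allowed. Real phrase tables also contain longer phrases, which only adds more hypotheses. The function name `count_full_hypotheses` is made up for the illustration.

```python
from math import factorial


def count_full_hypotheses(n_words, options_per_word):
    """Complete hypotheses when only single-word phrases are used:
    n! orderings of the words times k^n translation choices."""
    return factorial(n_words) * options_per_word ** n_words


for n in (3, 5, 10, 20):
    print(n, count_full_hypotheses(n, options_per_word=5))
```

With five options per word, a ten-word sentence already has more than 10^13 complete hypotheses under these assumptions.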