Phrase-based Model

Revision as of 15:21, 7 April 2015

| Lecture video: | web TODO Youtube |
|---|---|

Phrase-based machine translation (PBMT) is probably the most widely used approach to MT today. It is relatively simple and easy to adapt to new languages.

Phrase Extraction

PBMT uses phrases as the basic unit of translation. Phrases are simply contiguous sequences of words that have been observed in the training data; they don't correspond to any linguistic notion of phrases.

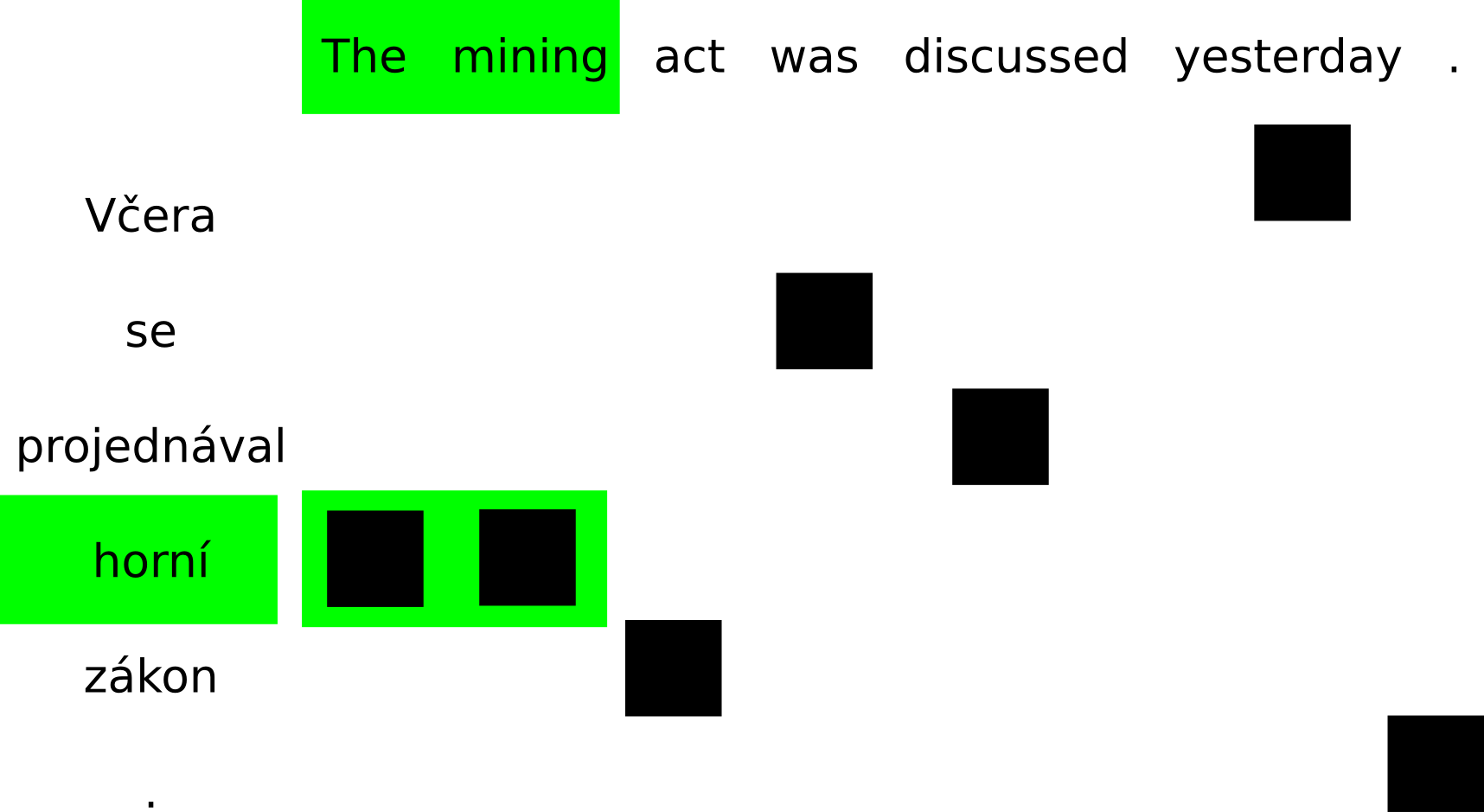

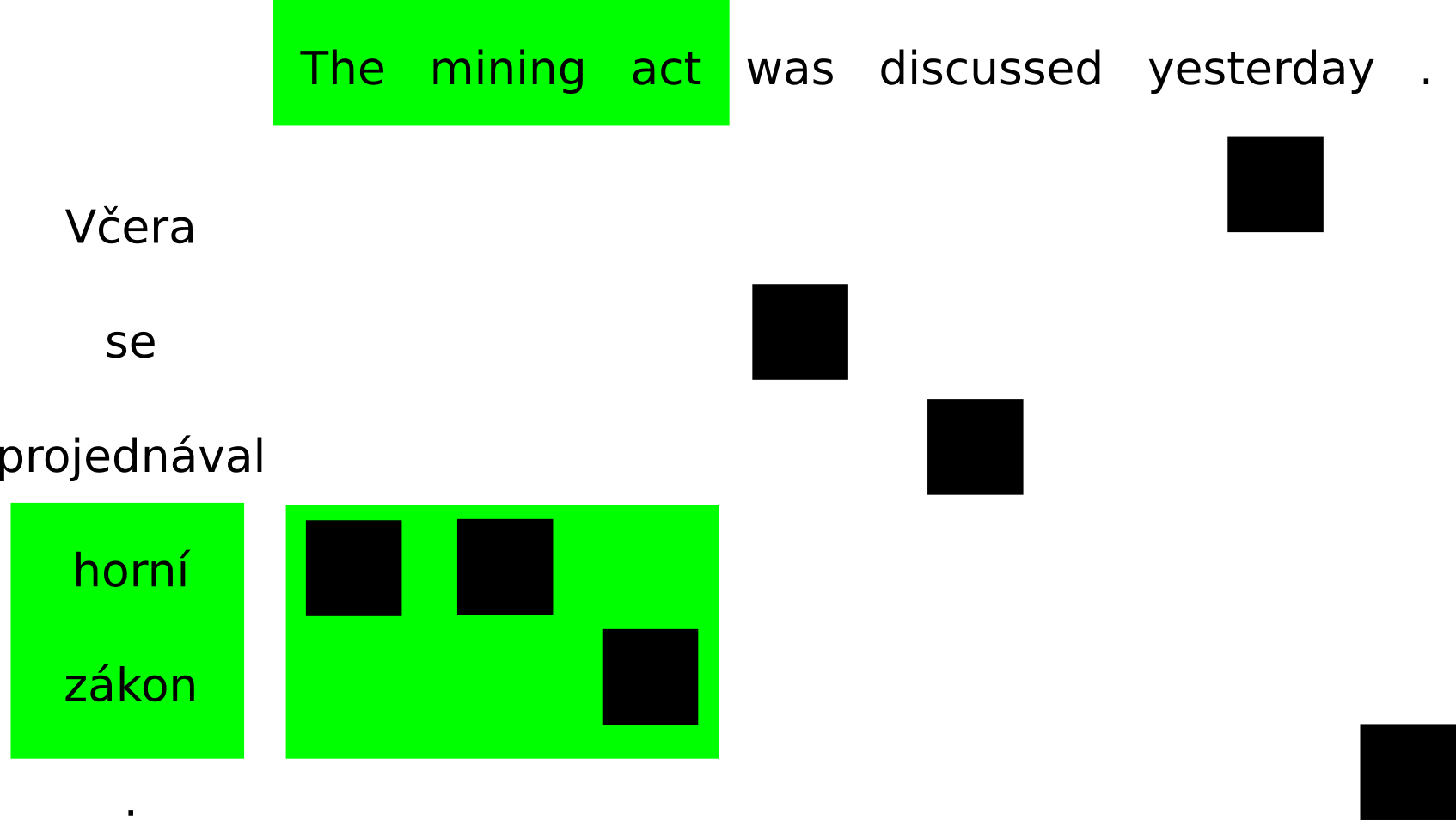

In order to obtain a phrase table (a probabilistic dictionary of phrases), we need word-aligned parallel data. Using the alignment links, a simple heuristic is applied to extract consistent phrase pairs. Consider the word-aligned example sentence:

Phrase pairs are contiguous spans where all alignment points from the source side of the span fall within its target side and vice versa. These are examples of phrases consistent with this word alignment:

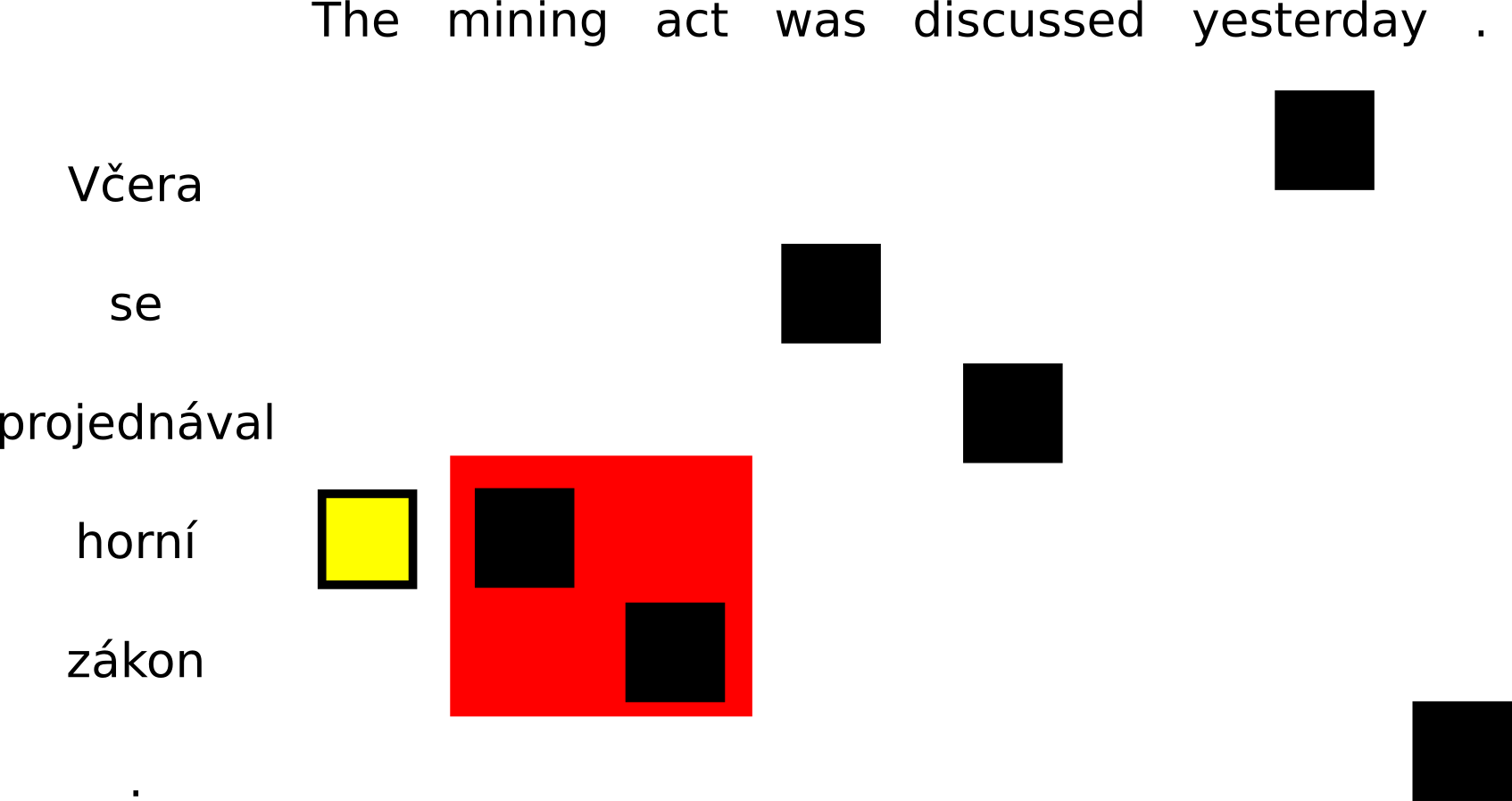

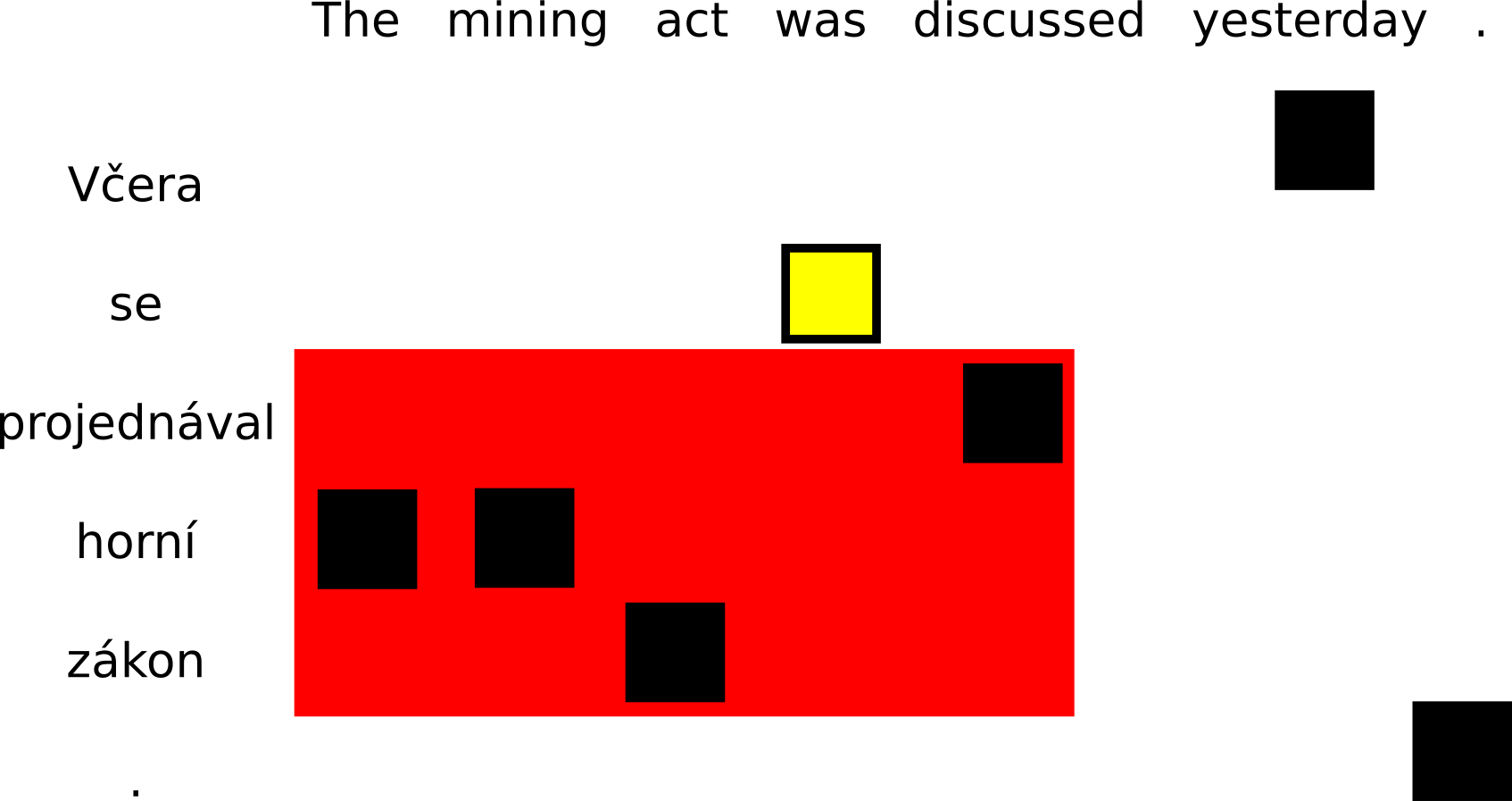
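The consistency condition can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the extraction code of any particular toolkit: the function names, the `max_len` limit, the requirement that a phrase pair contain at least one alignment link, and the toy French-English sentence are all hypothetical choices made for the example.

```python
def is_consistent(alignment, s1, s2, t1, t2):
    """Check one candidate phrase pair against the word alignment.

    alignment: set of (source_index, target_index) links.
    s1..s2 and t1..t2 are inclusive word spans.
    """
    has_link = False
    for s, t in alignment:
        src_inside = s1 <= s <= s2
        tgt_inside = t1 <= t <= t2
        if src_inside != tgt_inside:
            # A word inside the span is aligned to a word outside it.
            return False
        if src_inside:
            has_link = True
    # Assumption: a pair with no alignment link at all is not extracted.
    return has_link


def extract_phrases(src_words, tgt_words, alignment, max_len=4):
    """Enumerate all span pairs (up to max_len words) and keep consistent ones."""
    pairs = []
    for s1 in range(len(src_words)):
        for s2 in range(s1, min(s1 + max_len, len(src_words))):
            for t1 in range(len(tgt_words)):
                for t2 in range(t1, min(t1 + max_len, len(tgt_words))):
                    if is_consistent(alignment, s1, s2, t1, t2):
                        pairs.append((" ".join(src_words[s1:s2 + 1]),
                                      " ".join(tgt_words[t1:t2 + 1])))
    return pairs


# Hypothetical toy example with one reordering (noun/adjective swap).
src = ["la", "maison", "bleue"]
tgt = ["the", "blue", "house"]
links = {(0, 0), (1, 2), (2, 1)}

pairs = extract_phrases(src, tgt, links)
for source_phrase, target_phrase in pairs:
    print(source_phrase, "|||", target_phrase)
```

In this toy sentence, "la maison" yields no phrase pair: any target span covering both "the" and "house" also covers "blue", which is aligned to "bleue" outside the source span. The brute-force enumeration is chosen for readability; real systems use more efficient procedures.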

On the other hand, if either a source word or a target word is aligned outside of the current span, the phrase cannot be extracted. The conflicting alignment points are drawn in yellow:

Phrase Scoring

Once we have extracted all consistent phrase pairs from our training data, we can assign translation probabilities to them using maximum likelihood estimation. To estimate the probability of phrase $e$ being the translation of phrase $f$, we simply count:

$$
P(e|f) = \frac{\text{count}(e,f)}{\text{count}(f)}
$$

The formula tells us to count how many times we saw $f$ translated as $e$ in our training data and divide that by the number of times we saw $f$ in total.

In practice, other scores are also computed (e.g. $P(f|e)$) but that's a topic for another lecture.
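The relative-frequency estimate of the translation probability can be sketched as follows. This is a minimal illustration, assuming the extracted phrase pairs are available as a list of (source phrase, target phrase) tuples and that the count of a source phrase is the number of extracted pairs it appears in; the function name and the toy data are hypothetical.

```python
from collections import Counter


def score_phrases(phrase_pairs):
    """Return a dict mapping (f, e) to the estimate count(e, f) / count(f).

    phrase_pairs: iterable of (f, e) tuples, one per extracted occurrence.
    """
    pairs = list(phrase_pairs)
    pair_counts = Counter(pairs)                 # count(e, f)
    source_counts = Counter(f for f, _ in pairs) # count(f), assumed to be
                                                 # the sum over all e
    return {(f, e): c / source_counts[f] for (f, e), c in pair_counts.items()}


# Hypothetical extracted phrase pairs (not real training data).
observed = [
    ("Haus", "house"),
    ("Haus", "house"),
    ("Haus", "home"),
    ("klein", "small"),
]

probs = score_phrases(observed)
for (f, e), p in sorted(probs.items()):
    print(f"P({e} | {f}) = {p:.3f}")
```

Here "Haus" was seen three times, twice translated as "house", so the estimate for that pair is 2/3. The probabilities for a fixed source phrase sum to one by construction.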