Word Alignment

Revision as of 15:18, 24 March 2015

| Lecture video: | web TODO Youtube |
|---|---|
| Exercises: | IBM-1 Alignment Model |

In the previous lecture, we saw how to find sentences in parallel data which correspond to each other. Now we move one step further and look for words which correspond to each other in our parallel sentences.

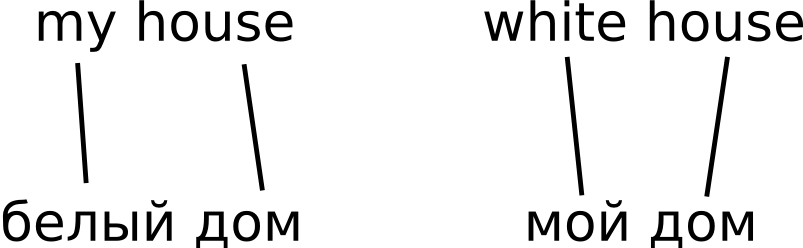

This task is usually called word alignment. Note that once we have solved it, we get a (probabilistic) translation dictionary for free. See our sample "parallel corpus":

For example, if we look for translations of the Russian word "дом", we simply collect all alignment links in our data and estimate translation probabilities from them. In our tiny example, we get:

<math>P(\text{house}|\text{dom}) = \frac{2}{2} = 1</math>
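The estimation step described here can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the list `alignment_links` and the function `estimate_translation_probs` are hypothetical names, and the links for "большой" are invented toy data. Only the two "дом" to "house" links reflect the example in the text. The sketch uses simple relative frequencies: the number of links from a source word to a given target word, divided by the total number of links from that source word.

```python
from collections import Counter, defaultdict

# Hypothetical toy alignment links as (source word, target word) pairs.
# Only the two "дом" -> "house" links mirror the example in the text;
# the "большой" links are made up for illustration.
alignment_links = [
    ("дом", "house"),
    ("дом", "house"),
    ("большой", "big"),
    ("большой", "large"),
]


def estimate_translation_probs(links):
    """Estimate P(target | source) as relative frequencies of alignment links."""
    pair_counts = Counter(links)                       # count(source, target)
    source_counts = Counter(src for src, _ in links)   # count(source)
    probs = defaultdict(dict)
    for (src, tgt), count in pair_counts.items():
        probs[src][tgt] = count / source_counts[src]
    return dict(probs)


translation_probs = estimate_translation_probs(alignment_links)
print(translation_probs["дом"])
```

With this toy data, "дом" is linked to "house" in both of its occurrences, so the estimate matches the 2/2 figure above, while the invented "большой" links split the probability mass evenly between two candidates.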

Exercises

References

- Peter F. Brown, Stephen A. Della Pietra, Vincent J. Della Pietra, Robert L. Mercer. The Mathematics of Statistical Machine Translation: Parameter Estimation. http://www.aclweb.org/anthology/J93-2003