Automatic MT Evaluation


Revision as of 10:14, 10 February 2015

| Lecture video: | web TODO Youtube |
|---|---|

Reference Translations

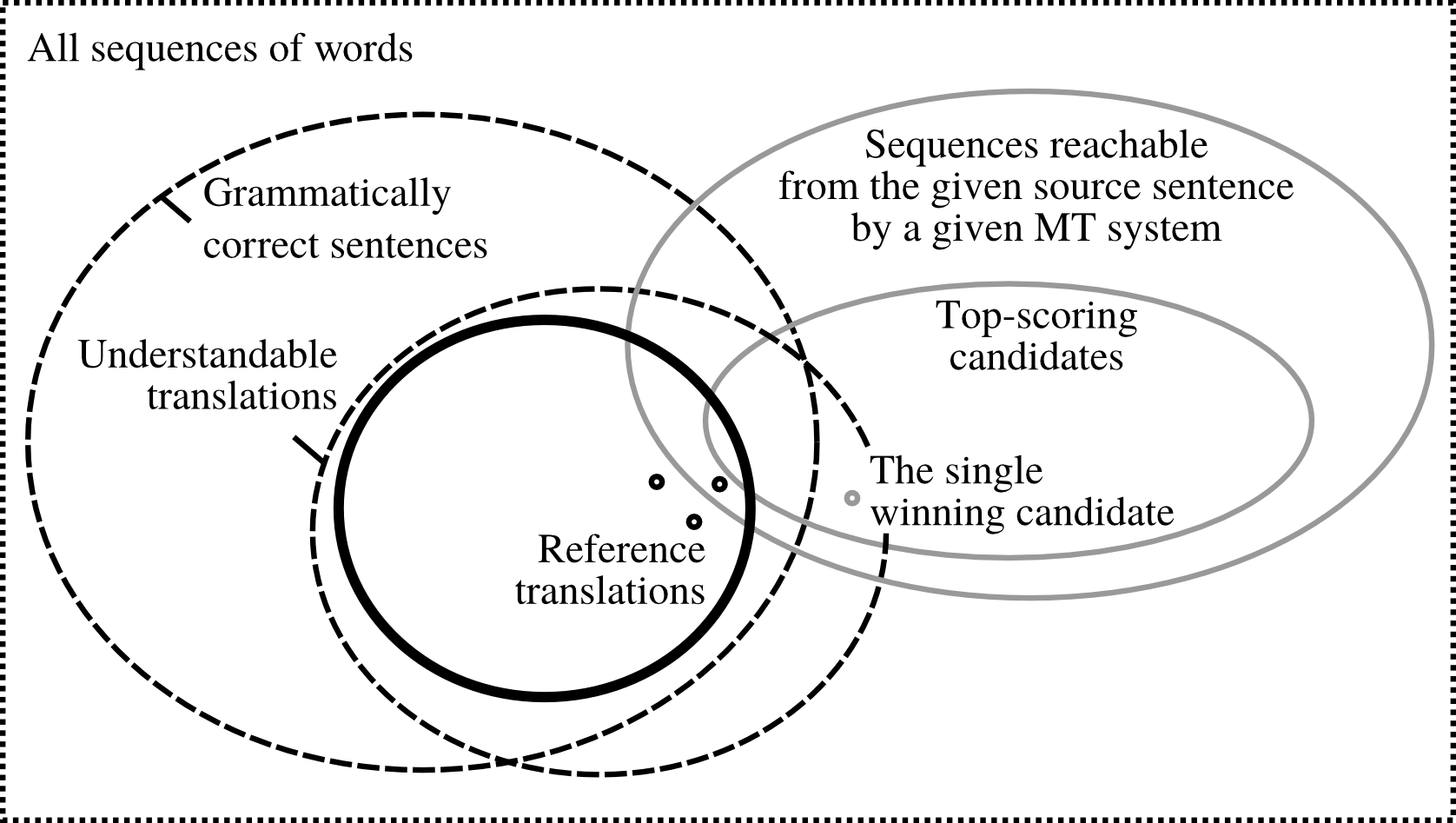

The following picture[1] illustrates the issue of reference translations:

Out of all possible sequences of words in the given language, only some are grammatically correct sentences. An overlapping set is formed by understandable translations of the source sentence (note that these are not necessarily grammatical). Possible reference translations can then be viewed as a subset of the sentences that are both grammatical and understandable translations. Only some of these can be reached by the MT system. Typically, we have only a few reference translations at our disposal, and often just a single one.

PER

Position-independent error rate[2] (PER) is a simple measure which counts the number of words which are identical in the MT output and the reference translation and divides
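The matching step can be sketched in Python as a multiset intersection of the two bags of words, so that word order plays no role and each reference word can be matched at most once. The normalization used here is an assumption: the sketch follows one commonly cited form, PER = 1 - (C - max(0, N_hyp - N_ref)) / N_ref, where C is the number of position-independent matches. The function names are hypothetical.

```python
from collections import Counter


def position_independent_matches(hypothesis: list[str], reference: list[str]) -> int:
    """Count words shared by hypothesis and reference, ignoring word order.

    Each reference token can match at most one hypothesis token
    (multiset intersection of the two bags of words).
    """
    overlap = Counter(hypothesis) & Counter(reference)
    return sum(overlap.values())


def per(hypothesis: list[str], reference: list[str]) -> float:
    """Position-independent error rate (ASSUMED normalization, see lead-in).

    Hypothesis words in excess of the reference length are penalized,
    and the result is divided by the reference length.
    """
    if not reference:
        raise ValueError("reference must be non-empty")
    matches = position_independent_matches(hypothesis, reference)
    surplus = max(0, len(hypothesis) - len(reference))
    return 1.0 - (matches - surplus) / len(reference)


if __name__ == "__main__":
    ref = "the cat sat on the mat".split()
    hyp = "on the mat the cat sat".split()
    print(position_independent_matches(hyp, ref))
    print(per(hyp, ref))  # same words in a different order
```

Because the words are compared as bags, a reordered but otherwise identical output gets a PER of 0, which is exactly the blind spot that motivates n-gram based metrics.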

BLEU

BLEU[3] (Bilingual evaluation understudy) remains the most popular metric for automatic evaluation of MT output quality.

While PER only looks at individual words, BLEU also considers sequences of words. Informally, BLEU measures the amount of n-gram overlap between the candidate translation and the reference; in the standard formulation, it uses unigrams, bigrams, trigrams and 4-grams.
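As a minimal illustration, the n-grams BLEU works with can be extracted as contiguous token windows and stored as a multiset, so repeated n-grams keep their counts. The helper name `ngrams` is illustrative and not taken from any particular toolkit.

```python
from collections import Counter


def ngrams(tokens: list[str], n: int) -> Counter:
    """Return a multiset (Counter) of all contiguous n-grams in `tokens`."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


if __name__ == "__main__":
    candidate = "the cat sat on the mat".split()
    # Standard BLEU looks at unigrams up to 4-grams.
    for n in range(1, 5):
        grams = ngrams(candidate, n)
        print(n, sum(grams.values()), grams.most_common(2))
```

A sentence of length L contains L - n + 1 n-grams of order n, so longer n-grams are both rarer and harder to match, which is why higher-order precisions are typically lower.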

The formal definition is as follows:

<math>
\text{BLEU} = \text{BP} \cdot \exp\left(\sum_{i=1}^{n} \lambda_i \log p_i\right)
</math>

where <math>n = 4</math> (almost always) and <math>\lambda_i = 1/n</math>. <math>p_i</math> stands for the i-gram precision, i.e. the proportion of i-grams in the candidate translation that are confirmed by the reference.
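Once the precisions are known, BLEU = BP · exp(Σ λ_i log p_i) takes only a few lines. With uniform weights λ_i = 1/n, the exponential of the weighted sum of logs is simply the geometric mean of the precisions. The sketch below assumes p_i and BP are already computed; the function name `combine_bleu` is hypothetical, and returning 0 when some p_i is zero (where log p_i is undefined) is a choice made here, not part of the definition above.

```python
import math


def combine_bleu(precisions: list[float], bp: float) -> float:
    """BLEU = BP * exp(sum_i lambda_i * log p_i) with uniform lambda_i = 1/n."""
    n = len(precisions)
    if n == 0:
        raise ValueError("need at least one precision")
    # log(0) is undefined; a zero precision drives the geometric mean to 0.
    if min(precisions) <= 0.0:
        return 0.0
    weight = 1.0 / n  # lambda_i = 1/n for every order i
    return bp * math.exp(sum(weight * math.log(p) for p in precisions))


if __name__ == "__main__":
    # Hypothetical precisions for 1- to 4-grams, no brevity penalty.
    print(combine_bleu([0.8, 0.6, 0.4, 0.2], bp=1.0))
```

The geometric mean means a single very low precision (often the 4-gram one on short sentences) pulls the whole score down, which is why sentence-level BLEU is usually smoothed in practice.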

Each reference n-gram can be used to confirm a candidate n-gram only once (clipping). This makes it impossible to game BLEU by producing many occurrences of a single common word (such as "the").
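Clipping can be sketched as capping each candidate n-gram count at the largest number of times that n-gram occurs in any single reference. The example contrasts this with a naive precision on a degenerate candidate made only of "the". The function names are illustrative; with one reference, the cap is just that reference's count.

```python
from collections import Counter


def ngrams(tokens: list[str], n: int) -> Counter:
    """Multiset of contiguous n-grams in `tokens`."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def clipped_precision(candidate: list[str], references: list[list[str]], n: int) -> float:
    """Modified n-gram precision: candidate counts are clipped to the
    maximum number of times each n-gram appears in any single reference."""
    cand_counts = ngrams(candidate, n)
    total = sum(cand_counts.values())
    if total == 0:
        return 0.0
    max_ref_counts: Counter = Counter()
    for ref in references:
        # Counter union (|) keeps the maximum count per n-gram.
        max_ref_counts |= ngrams(ref, n)
    clipped = sum(min(count, max_ref_counts[gram]) for gram, count in cand_counts.items())
    return clipped / total


def unclipped_precision(candidate: list[str], references: list[list[str]], n: int) -> float:
    """Naive precision: any candidate n-gram seen in some reference counts fully."""
    cand_counts = ngrams(candidate, n)
    total = sum(cand_counts.values())
    if total == 0:
        return 0.0
    seen = set()
    for ref in references:
        seen.update(ngrams(ref, n))
    return sum(c for g, c in cand_counts.items() if g in seen) / total


if __name__ == "__main__":
    candidate = "the the the the the the the".split()
    references = ["the cat is on the mat".split()]
    print(unclipped_precision(candidate, references, 1))  # every "the" is "confirmed"
    print(clipped_precision(candidate, references, 1))    # only 2 of the 7 count
```

Without clipping the degenerate output reaches a perfect unigram precision of 1.0; with clipping, only the two occurrences of "the" available in the reference are credited, giving 2/7.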

BP stands for brevity penalty. Since BLEU is a kind of precision, short outputs (which only contain words that the system is sure about) would score highly without BP. This penalty is defined simply as:

<math>
\text{BP} = \left\{
\begin{array}{l l}
1 & \quad \text{if } c > r\\
\exp(1-r/c) & \quad \text{if } c \leq r
\end{array} \right.
</math>

where <math>c</math> is the length of the candidate translation and <math>r</math> is the length of the reference.
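The brevity penalty translates directly into code. This is a minimal sketch with a hypothetical function name; treating an empty candidate (c = 0, where r/c is undefined) as BP = 0 is an assumption made here.

```python
import math


def brevity_penalty(c: int, r: int) -> float:
    """BP = 1 if c > r, otherwise exp(1 - r/c).

    c: length of the candidate translation (in tokens)
    r: length of the reference translation (in tokens)
    """
    if c > r:
        return 1.0
    if c == 0:
        return 0.0  # empty output: treated as zero, the formula is undefined for c = 0
    return math.exp(1.0 - r / c)


if __name__ == "__main__":
    for c in (12, 10, 8, 5):
        print(c, round(brevity_penalty(c, r=10), 3))
```

At c = r the second branch gives exp(0) = 1, so the two cases join continuously. Halving the length (a 5-token candidate against a 10-token reference) gives BP = exp(1 - 2) = e^(-1), roughly 0.368.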

Other Metrics

- Translation Error Rate (TER)

- METEOR

References

1. Ondřej Bojar, Matouš Macháček, Aleš Tamchyna, Daniel Zeman. Scratching the Surface of Possible Translations.

2. C. Tillmann, S. Vogel, H. Ney, A. Zubiaga, H. Sawaf. Accelerated DP Based Search for Statistical Translation.

3. Kishore Papineni, Salim Roukos, Todd Ward, Wei-Jing Zhu. BLEU: a Method for Automatic Evaluation of Machine Translation.