Automatic MT Evaluation

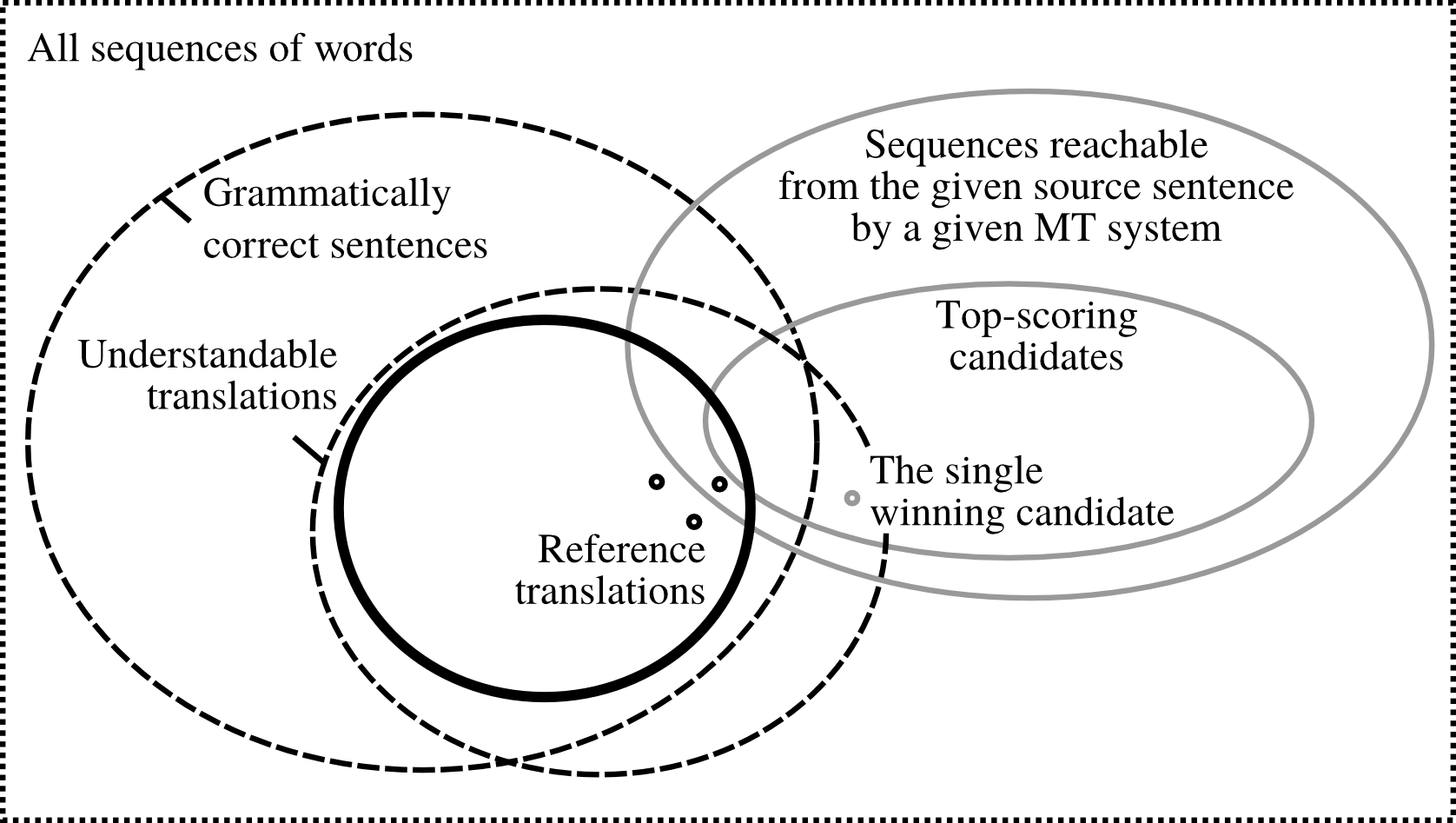

Revision as of 17:47, 9 February 2015

Lecture video: web TODO, YouTube (https://www.youtube.com/watch?v=Bj_Hxi91GUM&index=5&list=PLpiLOsNLsfmbeH-b865BwfH15W0sat02V)

Reference Translations

The following picture[1] illustrates the issue of reference translations:

Out of all possible sequences of words in the given language, only some are grammatically correct sentences (G). An overlapping set is formed by understandable translations of the source sentence (note that these are not necessarily grammatical). Possible reference translations can then be viewed as a subset of the intersection of these two sets.

Despite this fact, when we train or evaluate translation systems, we often rely on just a single reference translation.
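
To illustrate why relying on a single reference can be limiting, here is a minimal sketch that scores one hypothesis against one reference and then against two. The metric (clipped unigram precision, the basic building block of BLEU-style metrics), the function name `clipped_unigram_precision`, and the example sentences are all assumptions chosen for illustration; they do not come from the lecture.

```python
from collections import Counter


def clipped_unigram_precision(hypothesis, references):
    """Share of hypothesis tokens that also occur in the references.

    Each hypothesis word is credited at most as many times as it occurs
    in the single reference where it is most frequent (clipping).
    """
    hyp_counts = Counter(hypothesis.lower().split())
    if not hyp_counts:
        return 0.0

    # For every word, the highest count seen in any one reference.
    max_ref_counts = Counter()
    for ref in references:
        for word, count in Counter(ref.lower().split()).items():
            max_ref_counts[word] = max(max_ref_counts[word], count)

    matched = sum(min(count, max_ref_counts[word])
                  for word, count in hyp_counts.items())
    return matched / sum(hyp_counts.values())


# Hypothetical example sentences (not taken from any real test set).
hypothesis = "the cat sat on the mat"
single = ["a cat was sitting on the rug"]
multiple = single + ["the cat sat on a mat"]

p_single = clipped_unigram_precision(hypothesis, single)
p_multi = clipped_unigram_precision(hypothesis, multiple)

print(f"single reference:    {p_single:.2f}")  # 0.50
print(f"multiple references: {p_multi:.2f}")   # 0.83
```

The hypothesis is the same in both calls; only the set of references changes. With a single reference, acceptable word choices that happen not to appear in it are counted as errors, so the score reflects the choice of reference as much as the quality of the translation.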

Translation Evaluation Campaigns

There are several academic workshops where the quality of various translation systems is compared. Such "competitions" require manual evaluation. Their methodology evolves to make the results as fair and statistically sound as possible. The most prominent ones include:

- Workshop on Statistical Machine Translation (WMT)
- International Workshop on Spoken Language Translation (IWSLT)

References

[1] Ondřej Bojar, Matouš Macháček, Aleš Tamchyna, Daniel Zeman. Scratching the Surface of Possible Translations. https://ufal.mff.cuni.cz/~tamchyna/papers/2013-tsd.pdf