Deep Syntax


== Functional Generative Description ==

The functional generative description (FGD) is a linguistic theory developed by Petr Sgall in Prague in the 1960s. It formally describes language as a system of layers, ranging from the most basic (phonology) to the most abstract (deep syntax/semantics, the ''tectogrammatical layer''). The theory was developed with the intention of capturing language computationally, and indeed much of it has been implemented as computer programs. The system of layers has been gradually simplified, however, and currently only four layers are used (here we refer to the annotation scheme of the Prague Dependency Treebank).

== Prague Dependency Treebank ==

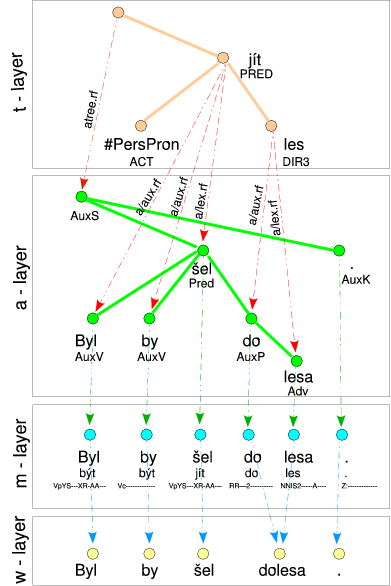

The Prague Dependency Treebank (PDT) is a corpus of Czech sentences manually annotated according to the FGD. An example of the layered description is shown in the following image (taken from the PDT 2.0 documentation):

[[File:i-layer-links.png|300px]]

The lowest layer contains the sentence "as is", without any annotation. The m-layer provides a morphological analysis of each word (and also corrects typing errors). The a-layer is a dependency tree that describes the surface syntax of the sentence. Finally, the t-layer is a more abstract dependency tree that describes the deep syntax of the sentence.
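The layered description can be pictured as a small data structure in which each layer links down to the one below it. The following is only a minimal sketch: the class names, the English toy sentence, and the simplified labels are illustrative assumptions, not the actual PDT data format. It also assumes that function words such as auxiliaries do not get t-layer nodes of their own.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class MNode:
    """m-layer: one morphologically analysed token."""
    form: str   # word form copied from the raw sentence
    lemma: str
    tag: str    # simplified part-of-speech tag (illustrative)


@dataclass
class ANode:
    """a-layer: surface-syntax node, one per token."""
    m_index: int            # link down to the m-layer
    parent: Optional[int]   # index of the governing a-node, None for the root
    afun: str               # surface function label (illustrative)


@dataclass
class TNode:
    """t-layer: deep-syntax node."""
    t_lemma: str
    functor: str            # deep role label (illustrative)
    parent: Optional[int]   # index of the governing t-node, None for the root
    a_indices: List[int] = field(default_factory=list)  # links down to the a-layer


# Lowest layer: the sentence "as is".
raw_sentence = "The dog has barked"

m_layer = [
    MNode("The", "the", "DET"),
    MNode("dog", "dog", "NOUN"),
    MNode("has", "have", "AUX"),
    MNode("barked", "bark", "VERB"),
]

a_layer = [
    ANode(0, 1, "AuxA"),     # "The" depends on "dog"
    ANode(1, 3, "Sb"),       # "dog" depends on "barked"
    ANode(2, 3, "AuxV"),     # "has" depends on "barked"
    ANode(3, None, "Pred"),  # "barked" is the root
]

# In this sketch only content words get t-nodes; each t-node remembers
# which a-nodes it was built from.
t_layer = [
    TNode("bark", "PRED", None, [2, 3]),
    TNode("dog", "ACT", 0, [0, 1]),
]


def surface_forms(t_index: int) -> List[str]:
    """Follow the links t-layer -> a-layer -> m-layer and return the covered word forms."""
    return [m_layer[a_layer[a].m_index].form for a in t_layer[t_index].a_indices]


print(surface_forms(0))  # ['has', 'barked']
print(surface_forms(1))  # ['The', 'dog']
```

The t-layer tree here has two nodes for a four-word sentence, which is the sense in which it is "more abstract" than the a-layer tree.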

== VALLEX ==

One of the central notions in FGD and PDT is (verb) valency. Essentially, valency is the ability of verbs to require arguments: for example, most verbs require an actor (subject), but only some require an object. VALLEX is a fine-grained valency dictionary of Czech verbs. The assumption underlying this dictionary is that different ''valency frames'' roughly correspond to different verb senses.
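The idea that valency frames roughly correspond to verb senses can be sketched with a toy lexicon. The entries below are invented for illustration (an English verb, with the functor labels ACT for actor and PAT for patient) and are not taken from VALLEX; the lookup function is likewise a hypothetical helper.

```python
from dataclasses import dataclass
from typing import Dict, List, Set, Tuple


@dataclass(frozen=True)
class Slot:
    functor: str      # deep role label, e.g. ACT or PAT
    obligatory: bool  # must this argument be present?


@dataclass(frozen=True)
class Frame:
    gloss: str              # informal description of the verb sense
    slots: Tuple[Slot, ...]


# Toy lexicon: one verb, two frames, two senses (illustrative only).
TOY_LEXICON: Dict[str, List[Frame]] = {
    "run": [
        Frame("move fast on foot", (Slot("ACT", True),)),
        Frame("manage, operate", (Slot("ACT", True), Slot("PAT", True))),
    ],
}


def matching_frames(lemma: str, observed: Set[str]) -> List[Frame]:
    """Return the frames whose obligatory slots are all filled and
    which license every observed argument."""
    result = []
    for frame in TOY_LEXICON.get(lemma, []):
        allowed = {slot.functor for slot in frame.slots}
        required = {slot.functor for slot in frame.slots if slot.obligatory}
        if required <= observed <= allowed:
            result.append(frame)
    return result


# "She runs."            -> only an actor is present
print([f.gloss for f in matching_frames("run", {"ACT"})])
# "She runs a company."  -> actor and patient are present
print([f.gloss for f in matching_frames("run", {"ACT", "PAT"})])
```

In this sketch, the set of arguments observed with the verb is enough to select a frame, and with it a sense. Real entries are much richer, so this should be read only as an illustration of the underlying assumption.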

== MT Using Deep Syntax: TectoMT ==

TectoMT is an implementation of the FGD framework for machine translation. It uses the analysis-transfer-synthesis approach and was developed primarily for English-Czech translation, although it has recently been extended to support other languages such as Dutch, German or Basque.

The input sentence is first analysed up to the tectogrammatical layer (deep syntax). This layer is assumed to be abstract enough that the structure of the dependency tree is language independent. This allows the transfer phase to merely "relabel" the tree nodes instead of performing a full tree-to-tree transfer, which would involve structural transformations. Once a deep syntactic representation of the translation has been produced, the generation phase constructs the surface representation in the target language.
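The "relabelling" view of transfer can be illustrated with a toy example. This is a sketch under strong assumptions, not TectoMT code: the node class, the two-entry English-Czech dictionary, and the helper functions are all hypothetical, and the analysis and synthesis phases are left out entirely.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class TNode:
    t_lemma: str
    functor: str
    children: List["TNode"] = field(default_factory=list)


# Hypothetical lemma dictionary (English -> Czech).
TOY_DICT: Dict[str, str] = {"bark": "štěkat", "dog": "pes"}


def transfer(node: TNode) -> TNode:
    """Copy the tree node by node, translating only the lemma.
    Functors and tree structure are left untouched."""
    return TNode(
        t_lemma=TOY_DICT.get(node.t_lemma, node.t_lemma),  # unknown lemmas are kept as is
        functor=node.functor,
        children=[transfer(child) for child in node.children],
    )


def shape(node: TNode) -> Tuple:
    """Structure of the tree with the lemmas stripped away."""
    return (node.functor, tuple(shape(child) for child in node.children))


# Deep-syntactic tree for "The dog has barked" (assumed output of analysis).
source_tree = TNode("bark", "PRED", [TNode("dog", "ACT")])
target_tree = transfer(source_tree)

print(target_tree.t_lemma, [c.t_lemma for c in target_tree.children])
print(shape(source_tree) == shape(target_tree))  # True
```

Because the structure is copied unchanged, the source and target trees always have the same shape in this sketch. That is exactly the simplification the language-independence assumption is meant to license.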

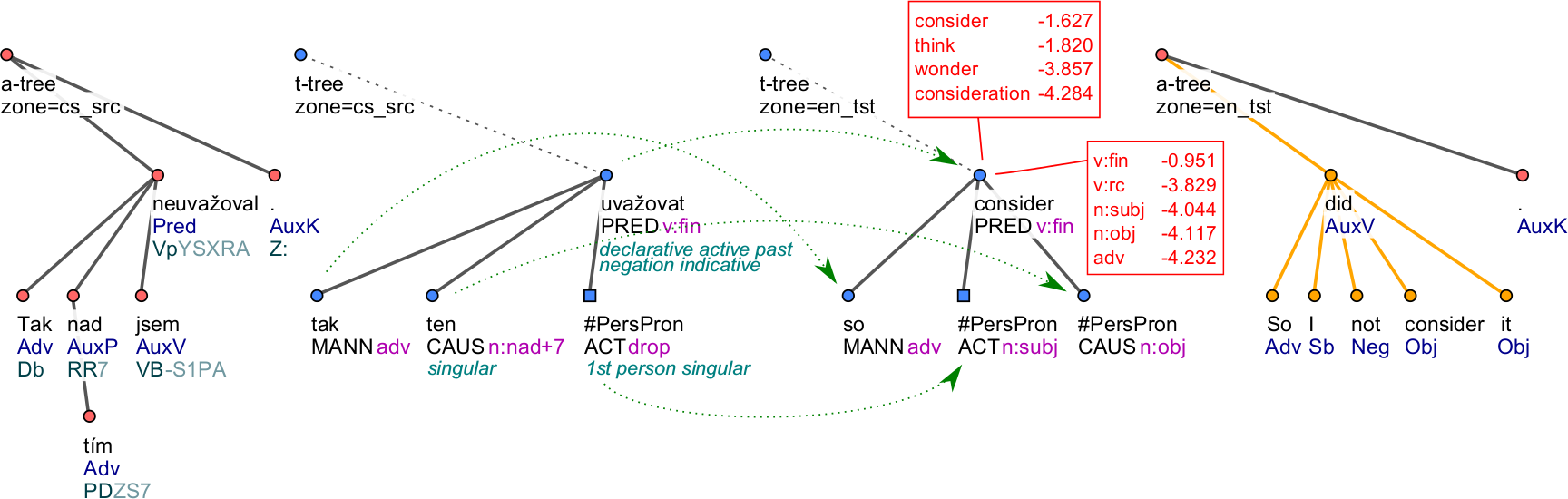

The following picture shows an example of Czech-English translation.

[[File:tectomt-example.png|800px]]

== Using Deep Syntax to Achieve State of the Art in MT ==

By itself, deep syntactic MT does not reach the performance of statistical methods (e.g. phrase-based MT). However, the outputs of TectoMT are usually grammatical sentences (as they are generated from a deep representation, preserving agreement constraints), and they can contain word forms not observed in the training data (thanks to its morphological generator). As such, they are a useful complement to statistical systems.

[http://ufal.mff.cuni.cz/chimera Chimera] is a system combination of a standard phrase-based MT system and TectoMT. The development and test data are translated using TectoMT, and its outputs are added as a separate parallel corpus. An extra phrase table is extracted from this synthetic set and added to Moses. The system can therefore choose to use either the standard parallel data or the outputs of TectoMT. Standard MERT is used to set the weights.
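The data flow of this combination can be sketched in a few lines. Everything below is a simplified illustration rather than the actual Chimera or Moses setup: phrase tables are plain dictionaries without scores, "extraction" treats each whole sentence pair as a single phrase pair, the stand-in translation function is a lookup table, and no weights or tuning are modelled.

```python
from typing import Callable, Dict, List, Tuple

PhraseTable = Dict[str, List[str]]


def build_synthetic_corpus(
    sources: List[str], translate: Callable[[str], str]
) -> List[Tuple[str, str]]:
    """Pair each source sentence with the rule-based system's output."""
    return [(src, translate(src)) for src in sources]


def extract_toy_table(corpus: List[Tuple[str, str]]) -> PhraseTable:
    """Toy 'phrase extraction': every sentence pair becomes one phrase pair."""
    table: PhraseTable = {}
    for src, tgt in corpus:
        targets = table.setdefault(src, [])
        if tgt not in targets:
            targets.append(tgt)
    return table


def translation_options(
    phrase: str, main_table: PhraseTable, synthetic_table: PhraseTable
) -> List[Tuple[str, str]]:
    """Collect candidates from both tables, tagged with their origin,
    so that a decoder could choose between them."""
    options = [(tgt, "main") for tgt in main_table.get(phrase, [])]
    options += [(tgt, "tectomt") for tgt in synthetic_table.get(phrase, [])]
    return options


# Stand-in for the deep-syntactic system (hypothetical lookup, not TectoMT).
fake_outputs = {"the dogs bark": "psi štěkají"}


def fake_tectomt(sentence: str) -> str:
    return fake_outputs[sentence]


# Table extracted from the standard parallel data (illustrative content).
main_table: PhraseTable = {"the dog barks": ["pes štěká"]}

# Development/test sentences are translated and turned into a second table.
dev_and_test = ["the dogs bark"]
synthetic_table = extract_toy_table(build_synthetic_corpus(dev_and_test, fake_tectomt))

print(translation_options("the dog barks", main_table, synthetic_table))
print(translation_options("the dogs bark", main_table, synthetic_table))
```

In the second call the only candidate comes from the synthetic table. This mirrors the motivation given above: the rule-based outputs can supply forms that the standard parallel data does not cover.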

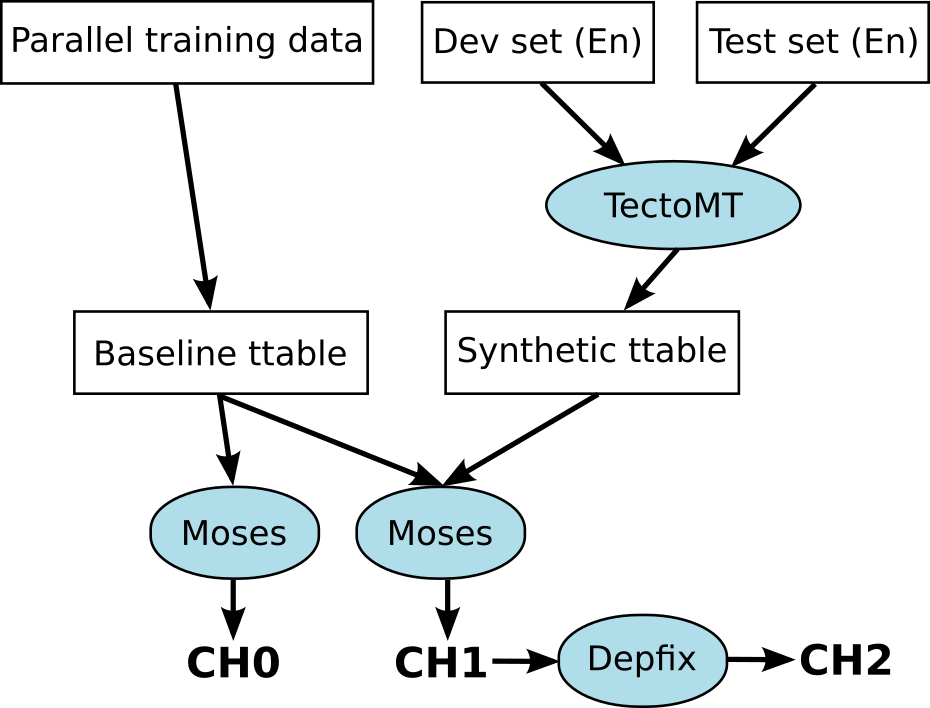

The following figure illustrates the structure of Chimera. The final system is CH2, but CH1 (Moses + TectoMT, without automatic post-editing) and CH0 (plain Moses only) have also been evaluated.

[[File:chimera.png|400px]]

Chimera currently represents the state of the art in English-Czech MT; it was ranked first by human judges in three consecutive years of the WMT shared Translation Task (2013, 2014, 2015).

The following table shows the improvements from adding extra data and from including the TectoMT outputs. The results suggest that TectoMT provides improvements which are complementary and which significantly help translation quality. The constrained setup used only 15 million parallel sentence pairs, as opposed to over 52 million in the full system. In terms of monolingual data, the difference was 44 vs. 392 million sentences.

{| class="wikitable"
!
! Constrained
! Full
! Delta
|-
! CH0
| 21.28
| 22.59
| 1.31
|-
! CH1
| 23.37
| 24.24
| 0.87
|-
! Delta
| 2.09
| 1.65
|
|}

Latest revision as of 17:25, 17 November 2015
