MT Talks

Latest revision as of 11:01, 6 November 2019

MT Talks is a series of mini-lectures on machine translation.

Our goal is to hit just the right level of detail and technicality: enough to make the talks interesting and attractive to people who are not yet familiar with the field, while mixing in new observations and insights so that even old pals will have a reason to watch us.

MT Talks and the expanded notes on this wiki will never be the ultimate resource for MT, but we would be very happy to serve as an ultimate commented directory of good pointers.

By the way, this is indeed a Wiki, so your contributions are very welcome! Please register and feel free to add comments, corrections or links to useful resources.

Relation to Neural MT

MT Talks were created before neural MT (NMT) was seriously considered. Some of the talks that describe pre-neural solutions have thus lost part of their relevance, and some problems (e.g. morphological richness) have become substantially less severe.

For an example of a top-performing neural MT system, see our Demo at Lindat (http://lindat.cz/services/translation/).

Our Talks

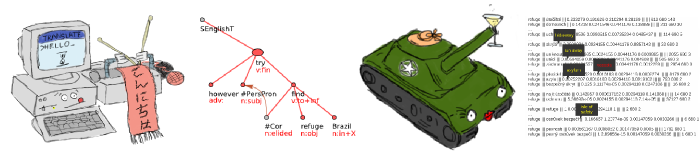

01 Intro: Why is MT difficult, approaches to MT.

02 MT that Deceives: Serious translation errors even for short and simple inputs.

03 Pre-processing: Normalization and other technical tricks bound to help your MT system.

04 MT Evaluation in General: Techniques of judging MT quality, dimensions of translation quality, number of possible translations.

05 Automatic MT Evaluation: Two common automatic MT evaluation methods, PER and BLEU.

06 Data Acquisition: The need for training data for MT and its possible sources, and the diminishing utility of additional data due to Zipf's law.

07 Sentence Alignment: An introduction to the Gale & Church sentence alignment algorithm.

08 Word Alignment: Cutting the chicken-and-egg problem.

09 Phrase-based Model: Copy if you can.

10 Constituency Trees: Divide and conquer.

11 Dependency Trees: Trees with gaps.

12 Rich Vocabulary: Rindfleischetikettierungsüberwachungsaufgabenübertragungsgesetz.

13 Scoring and Optimization: Features your model features.

14 Deep Syntax: Prague Family Jewels.

Contributing

Due to spamming, we had to restrict permissions for editing the Wiki. If you're interested in contributing, please write an email to tamchyna -at- ufal.mff.cuni.cz to obtain a username.

Other Videolectures on MT

- Approaches to Machine Translation: Rule-Based, Statistical, Hybrid (an online course on MT by UPC Barcelona)

- Natural Language Processing at Coursera by Michael Collins, which includes lectures on word-based and phrase-based models (further notes are available).

- TAUS Machine Translation and Moses Tutorial (a series of commented slides, MT overview and practical aspects of the Moses Toolkit)

Acknowledgement

The work on this project has been supported by the grant FP7-ICT-2011-7-288487 (MosesCore).