MT Evaluation in General

No edit summary |

No edit summary |

||

| (27 intermediate revisions by 2 users not shown) | |||

| Line 3: | Line 3: | ||

|image = [[File:worker.png|200px]] | |image = [[File:worker.png|200px]] | ||

|label1 = Lecture video: | |label1 = Lecture video: | ||

|data1 = [http://example.com web '''TODO'''] <br/> [ | |data1 = [http://example.com web '''TODO'''] <br/> [https://www.youtube.com/watch?v=kSVb4-xI0Fw&index=4&list=PLpiLOsNLsfmbeH-b865BwfH15W0sat02V Youtube] | ||

}} | }} | ||

{{#ev:youtube| | {{#ev:youtube|https://www.youtube.com/watch?v=kSVb4-xI0Fw&index=4&list=PLpiLOsNLsfmbeH-b865BwfH15W0sat02V|800|center}} | ||

== Data Splits == | == Data Splits == | ||

Available training data is usually split into several parts, e.g. | Available training data is usually split into several parts, e.g. '''training''', '''development''' (held-out) and '''(dev-)test'''. Training data is used to estimate model parameters, development set can be used for model selection, hyperparameter tuning etc. and dev-test is used for continuous evaluation of progress (are we doing better than before?). | ||

However, you should always keep an additional | However, you should always keep an additional '''(final) test set''' which is used only very rarely. Evaluating your system on the final test set can then be used as a rough estimate of its true performance because you do not use it in the development process at all, and therefore do not bias your system towards it. | ||

The "golden rule" of (MT) evaluation: | The "golden rule" of (MT) evaluation: '''Evaluate on unseen data!''' | ||

== Approaches to Evaluation == | |||

Let us first introduce the example that we will use throughout the section: | |||

=== Example Sentence + Translations === | |||

Original German sentence: | |||

: ''Arbeiter sturzte von Leiter: schwer verletzt'' | |||

English reference translation: | |||

: ''Worker falls from ladder: seriously injured'' | |||

{| | |||

! Translation Candidate | |||

! Notes | |||

|- | |||

| '''A''' ''Workers rushed from director: Seriously injured'' | |||

| plural (workers), bad choice of verb (rushed), ''Leiter'' mistranslated as ''director'' | |||

|- | |||

| '''B''' ''Workers fell from ladder: hurt'' | |||

| plural (workers), intensifier missing | |||

|- | |||

| '''C''' ''Worker rushed from ladder: schwer verletzt'' | |||

| bad choice of verb (rushed), tail is left untranslated | |||

|- | |||

| '''D''' ''Worker fell from leader: heavily injures'' | |||

| ''Leiter'' translated as ''leader'' (not a typo, a bad lexical choice), poor morphological choices | |||

|} | |||

=== Absolute Ranking === | |||

We put each translation into a category that best describes its quality. The following categories can be used: | |||

{| | |||

| '''Worth publishing''' | |||

| Translation is almost perfect, can be published as-is. | |||

|- | |||

| '''Worth editing''' | |||

| Translation contains minor errors which can be quickly fixed by a human post-editor. | |||

|- | |||

| '''Worth reading''' | |||

| Translation contains major errors but can be used for rough understanding of the text (''gisting''). | |||

|} | |||

If we define our categories like this, probably all example translations fall in the ''worth editing'' bin. | |||

We can also separate our assessment of translation quality into different aspects (or dimensions). One division that has been used extensively for MT evaluation is: | |||

* '''Adequacy''' -- how faithfully does the translation capture the meaning of the source sentence | |||

* '''Fluency''' -- is the translation a grammatical, fluent sentence in the target language? (regardless of meaning) | |||

In this case, e.g. candidate '''A''' could be marked as ''worth publishing'' in terms of fluency, while it is ''worth reading'' at best in terms of adequacy. | |||

=== Relative Ranking === | |||

In this case, we avoid assigning translations into categories and instead ask the human judge(s) to rank the possible translations relative to one another. (Human [http://en.wikipedia.org/wiki/Inter-rater_reliability inter-annotator agreement] can be surprisingly low in both scenarios, though.) | |||

In our example, we would probably order the systems (from best to worst): '''B > D > C > A''' | |||

Different annotators could come up with different rankings. Ranking can also differ according to the intended '''purpose''' of the translations -- if a human translator is supposed to post-edit the translation, major errors in adequacy (such as spurious/missing negation) might be easy to fix and therefore such translations could be ranked higher than factually correct translations with lots of small errors. | |||

== Dimensions of Translation Quality == | |||

Multidimensional Quality Metrics (MQM <ref name="mqm">Arle Richard Lommel, Aljoscha Burchardt, Hans Uszkoreit. ''[http://www.mt-archive.info/10/Aslib-2013-Lommel.pdf Multidimensional Quality Metrics: A Flexible System for Assessing Translation Quality]''</ref>) provides probably the greatest level of detail for various aspects (or dimensions) of translation quality: | |||

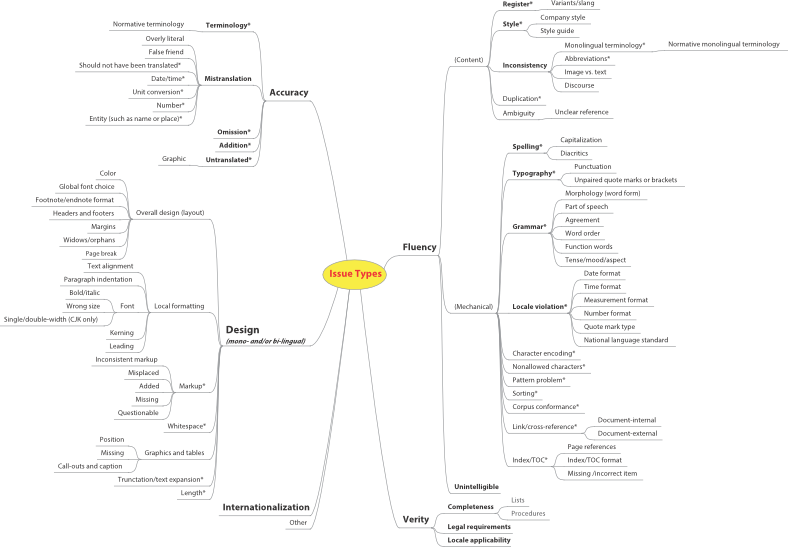

[[File:mqm.png|800px]] | |||

== Space of Possible Translations == | |||

An inherent issue with MT evaluation is the fact that there is usually more than one correct translation. In fact, several experiments<ref name="deprefset">Ondřej Bojar, Matouš Macháček, Aleš Tamchyna, Daniel Zeman. ''[https://ufal.mff.cuni.cz/~tamchyna/papers/2013-tsd.pdf Scratching the Surface of Possible Translations]''</ref><ref name="hyter">Markus Dreyer, Daniel Marcu. ''[http://www.aclweb.org/anthology/N12-1017 HyTER: Meaning-Equivalent Semantics for Translation Evaluation]''</ref> show that there can be as many as hundreds of thousands or even millions of correct translations per a single sentence. | |||

Such a high number of possible translations is mainly caused by the flexibility of lexical choice and word order. (In our example, the German word "''Arbeiter''" can be translated into English as "''worker''" or "''employee''".) Every such decision multiplies the number of translations, which thus grows exponentially. | |||

Despite this fact, when we train or evaluate translation systems, we often rely on just a single reference translation. | |||

== Translation Evaluation Campaigns == | |||

There are several academic workshops where the quality of various translation systems is compared. Such "competitions" require manual evaluation. Their methodology evolves to make the results as fair and statistically sound as possible. The most prominent ones include: | |||

[http://www.statmt.org/wmt14/ Workshop on Statistical Machine Translation (WMT)] | |||

[http://workshop2014.iwslt.org/ International Workshop on Spoken Language Translation (IWSLT)] | |||

== References == | |||

<references/> | |||

Latest revision as of 17:42, 9 February 2015

Lecture video: https://www.youtube.com/watch?v=kSVb4-xI0Fw&index=4&list=PLpiLOsNLsfmbeH-b865BwfH15W0sat02V

Data Splits

The available data is usually split into several parts, e.g. training, development (held-out) and (dev-)test. The training data is used to estimate model parameters; the development set can be used for model selection, hyperparameter tuning, etc.; and the dev-test set is used for continuous evaluation of progress (are we doing better than before?).

However, you should always keep an additional (final) test set which is used only very rarely. Evaluating your system on the final test set can then be used as a rough estimate of its true performance because you do not use it in the development process at all, and therefore do not bias your system towards it.

The "golden rule" of (MT) evaluation: Evaluate on unseen data!
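The split described above can be sketched in a few lines of Python. This is only a minimal illustration, not a prescribed procedure: the function name `split_corpus`, the part sizes, the fixed random seed and the toy corpus are all assumptions made for the example.

```python
import random


def split_corpus(pairs, dev_size, devtest_size, test_size, seed=0):
    """Shuffle sentence pairs and cut them into four disjoint parts.

    The sizes and the seed are illustrative assumptions, not recommendations.
    """
    held_out = dev_size + devtest_size + test_size
    if held_out >= len(pairs):
        raise ValueError("not enough data left for training")

    shuffled = list(pairs)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible

    # The final test set is cut off first and should then be left alone.
    test = shuffled[:test_size]
    devtest = shuffled[test_size:test_size + devtest_size]
    dev = shuffled[test_size + devtest_size:held_out]
    train = shuffled[held_out:]
    return {"train": train, "dev": dev, "devtest": devtest, "test": test}


# Toy parallel corpus (hypothetical data) of 1000 sentence pairs.
corpus = [(f"source sentence {i}", f"target sentence {i}") for i in range(1000)]
splits = split_corpus(corpus, dev_size=50, devtest_size=50, test_size=50)
print({name: len(part) for name, part in splits.items()})
```

Because the parts are slices of one shuffled list, no sentence pair can end up in two parts, which is what the golden rule requires. Real corpora often need extra care that this sketch does not attempt, such as removing duplicate sentences before splitting.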

Approaches to Evaluation

Let us first introduce the example that we will use throughout the section:

Example Sentence + Translations

Original German sentence:

- Arbeiter stürzte von Leiter: schwer verletzt

English reference translation:

- Worker falls from ladder: seriously injured

| Translation Candidate | Notes |

|---|---|

| A Workers rushed from director: Seriously injured | plural (workers), bad choice of verb (rushed), Leiter mistranslated as director |

| B Workers fell from ladder: hurt | plural (workers), intensifier missing |

| C Worker rushed from ladder: schwer verletzt | bad choice of verb (rushed), tail is left untranslated |

| D Worker fell from leader: heavily injures | Leiter translated as leader (not a typo, a bad lexical choice), poor morphological choices |

Absolute Ranking

We put each translation into a category that best describes its quality. The following categories can be used:

| Category | Description |

|---|---|

| Worth publishing | Translation is almost perfect, can be published as-is. |

| Worth editing | Translation contains minor errors which can be quickly fixed by a human post-editor. |

| Worth reading | Translation contains major errors but can be used for rough understanding of the text (gisting). |

If we define our categories like this, probably all example translations fall in the worth editing bin.

We can also separate our assessment of translation quality into different aspects (or dimensions). One division that has been used extensively for MT evaluation is:

- Adequacy -- how faithfully does the translation capture the meaning of the source sentence?

- Fluency -- is the translation a grammatical, fluent sentence in the target language? (regardless of meaning)

For example, candidate A could be marked as worth publishing in terms of fluency, while it is worth reading at best in terms of adequacy.

Relative Ranking

In this case, we avoid assigning translations into categories and instead ask the human judge(s) to rank the possible translations relative to one another. (Human inter-annotator agreement can be surprisingly low in both scenarios, though.)

In our example, we would probably order the systems (from best to worst): B > D > C > A

Different annotators could come up with different rankings. The ranking can also differ according to the intended purpose of the translations. If a human translator is supposed to post-edit the output, major adequacy errors (such as a spurious or missing negation) might be easy to fix, so such translations could be ranked higher than factually correct translations with many small errors.
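One simple way to see how far two annotators disagree is to break each ranking into pairwise preferences and count the pairs on which they agree. The sketch below uses the ranking B > D > C > A from above for the first annotator; the second annotator's ranking is hypothetical and only serves the illustration, as are the function names.

```python
from itertools import combinations


def pairwise_preferences(ranking):
    """Map every unordered pair of candidates to the one ranked higher.

    `ranking` lists the candidates from best to worst.
    """
    position = {candidate: i for i, candidate in enumerate(ranking)}
    preferences = {}
    for a, b in combinations(sorted(ranking), 2):
        preferences[(a, b)] = a if position[a] < position[b] else b
    return preferences


def pairwise_agreement(ranking_1, ranking_2):
    """Fraction of candidate pairs that both rankings order the same way."""
    prefs_1 = pairwise_preferences(ranking_1)
    prefs_2 = pairwise_preferences(ranking_2)
    same = sum(1 for pair in prefs_1 if prefs_1[pair] == prefs_2[pair])
    return same / len(prefs_1)


annotator_1 = ["B", "D", "C", "A"]  # the ranking given in the text
annotator_2 = ["B", "C", "D", "A"]  # hypothetical second annotator
agreement = pairwise_agreement(annotator_1, annotator_2)
print(f"{agreement:.2f}")
```

Here the two annotators disagree only on the pair (C, D), so they agree on 5 of the 6 pairs. This raw agreement rate is not corrected for chance agreement; chance-corrected coefficients such as Cohen's kappa are a separate step that the sketch does not cover.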

Dimensions of Translation Quality

Multidimensional Quality Metrics (MQM [1]) provides probably the greatest level of detail for various aspects (or dimensions) of translation quality:

[Figure: mqm.png]

Space of Possible Translations

An inherent issue with MT evaluation is that there is usually more than one correct translation. In fact, several experiments [2][3] show that there can be hundreds of thousands or even millions of correct translations for a single sentence.

Such a high number of possible translations is mainly caused by the flexibility of lexical choice and word order. (In our example, the German word "Arbeiter" can be translated into English as "worker" or "employee".) Every such decision multiplies the number of translations, which thus grows exponentially.
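The multiplication can be made concrete with a small sketch. Only the worker/employee alternative comes from the example above; every other alternative in `slots` is a hypothetical choice added for illustration, and the sketch ignores word order and punctuation entirely.

```python
from itertools import product
from math import prod

# One list of alternatives per position in the sentence.
slots = [
    ["worker", "employee"],              # alternatives named in the text
    ["falls", "fell"],                   # hypothetical alternatives
    ["from", "off"],                     # hypothetical alternatives
    ["ladder"],                          # no alternative considered here
    ["seriously", "severely", "badly"],  # hypothetical alternatives
    ["injured", "hurt"],                 # hypothetical alternatives
]

# Every independent choice multiplies the number of variants: 2*2*2*1*3*2.
count = prod(len(alternatives) for alternatives in slots)

# Enumerating the variants explicitly gives the same number.
variants = [" ".join(words) for words in product(*slots)]

print(count)
print(variants[0])
```

Even this six-word toy sentence with at most three alternatives per position yields 48 variants. With k independent two-way choices the count is 2 to the power of k, which is how longer sentences reach the very large numbers mentioned above.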

Despite this fact, when we train or evaluate translation systems, we often rely on just a single reference translation.

Translation Evaluation Campaigns

There are several academic workshops where the quality of various translation systems is compared. Such "competitions" require manual evaluation. Their methodology evolves to make the results as fair and statistically sound as possible. The most prominent ones include:

- Workshop on Statistical Machine Translation (WMT), http://www.statmt.org/wmt14/

- International Workshop on Spoken Language Translation (IWSLT), http://workshop2014.iwslt.org/

References

- [1] Arle Richard Lommel, Aljoscha Burchardt, Hans Uszkoreit. Multidimensional Quality Metrics: A Flexible System for Assessing Translation Quality. http://www.mt-archive.info/10/Aslib-2013-Lommel.pdf

- [2] Ondřej Bojar, Matouš Macháček, Aleš Tamchyna, Daniel Zeman. Scratching the Surface of Possible Translations. https://ufal.mff.cuni.cz/~tamchyna/papers/2013-tsd.pdf

- [3] Markus Dreyer, Daniel Marcu. HyTER: Meaning-Equivalent Semantics for Translation Evaluation. http://www.aclweb.org/anthology/N12-1017