Word Alignment: Difference between revisions

No edit summary |

No edit summary |

||

| (19 intermediate revisions by the same user not shown) | |||

| Line 10: | Line 10: | ||

{{#ev:youtube|https://www.youtube.com/watch?v=mqyMDLu5JPw|800|center}} | {{#ev:youtube|https://www.youtube.com/watch?v=mqyMDLu5JPw|800|center}} | ||

In the [[Sentence Alignment|previous lecture]], we saw how to find sentences in parallel data which correspond to each other. Now we move one step further and look for '''words''' which correspond to each other in our parallel sentences. | |||

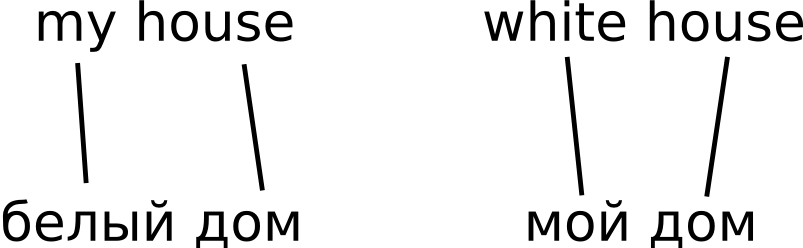

This task is usually called '''word alignment'''. Note that once we have solved it, we get a (probabilistic) translation dictionary for free. See our sample "parallel corpus": | |||

[[File:word-alignment.png|300px]] | |||

For example, if we look for translations of the Russian word "дом", we simply collect all its ''alignment links'' (lines leading from the word "дом") in our data and estimate translation probabilities from them. In our tiny example, we get P("house"|"дом") = 2/2 = 1. | |||

== IBM Model 1 == | |||

The so-called IBM models<ref name="ibmmodels">Peter F. Brown, Stephen A. Della Pietra, Vincent J. Della Pietra, Robert L. Mercer ''[http://www.aclweb.org/anthology/J93-2003 The Mathematics of Statistical Machine Translation: Parameter Estimation]''</ref> are the best-known and still the most commonly used approach to word alignment. In our lecture, we only go through the first, simplest model. | |||

The algorithm for IBM-1 only looks at ''lexical translation probability''. So basically, our goal is to fill in a matrix which says for each target word <math>w_t</math> and source word <math>w_s</math> what is the probability <math>P(w_t|w_s)</math>. Once we have this dictionary, we can get the actual alignments by finding the most probable target word for each source word (just in the case of IBM-1) and drawing a link there. | |||

The learning algorithm is an instance of [http://en.wikipedia.org/wiki/Expectation%E2%80%93maximization_algorithm Expectation-maximization]. In general, this is an iterative procedure which alternates between two steps until convergence: | |||

# '''E-step''': apply current model parameters to the data | |||

# '''M-step''': estimate new model parameters from the data | |||

Initially, all of our translation probabilities are uniform. | |||

In the '''expectation step''', we apply them to our data, i.e. we draw alignment links. Assuming our current sentence contains <math>T</math> target words, we draw a link of "weight" <math>1/T</math> (because our initial probability is uniform) from each source word to each target word. And for each source word, we remember that we have seen it aligned to each of the target words in the sentence <math>1/T</math> times. That is, we collect ''fractional counts''. | |||

Now assume that we have gone through our whole corpus. We have seen a given source word <math>s_i</math> with some target words <math>t_{j_1},\ldots,t_{j_n}</math> some (fractional) number of times. Now in the '''maximization step''', we turn these fractional counts into probabilities simply by normalizing them (this is the optimal thing to do, we could formally derive that this is the [http://en.wikipedia.org/wiki/Maximum_likelihood maximum likelihood estimate] for this model): | |||

<math>P(t_{j_1}|s_i) = \frac{\text{count}(t_{j_1}, s_i)}{\sum_{t'} \text{count}(t', s_i)}</math> | |||

The words that occurred frequently with our source word <math>s_i</math> will have a higher fractional count, and therefore a higher translation probability. | |||

In the next iteration, we apply our newly learned probabilities to our data, collect the fractional counts etc. We can run this algorithm for a limited number of iterations or check for convergence (the conditional probabilities stop changing). In this simple model, we are guaranteed to find a solution which is globally optimal for our data. | |||

=== Model Limitations === | |||

IBM Model 1 only looks at ''lexical translation probability''. It has no notion of '''word position''' so it is happy to align two neighboring words in the source sentence to two completely different positions in the target. It also disregards how many alignment links lead to a particular word (it does not model the so-called '''word fertility'''), so in some cases, it can even align the whole source sentence to a single target-side word, leaving other target words unaligned. Higher IBM models address these limitations with probability estimates of distortion and fertility. | |||

== Exercises == | == Exercises == | ||

* [https://codex3.ms.mff.cuni.cz/codex-trans/?groupId=3&taskId=11&module=groups%2Ftasks&page=specification Implement IBM-1 word alignment] | * [https://codex3.ms.mff.cuni.cz/codex-trans/?groupId=3&taskId=11&module=groups%2Ftasks&page=specification Implement IBM-1 word alignment] | ||

== See Also == | |||

* Adam Lopez' [http://lectures.ms.mff.cuni.cz/video/recordshow/index/44/172 lecture] on IBM model 1 and the accompanying [http://ufal.mff.cuni.cz/mtm13/files/05-word-alignment-adam-lopez.pdf slides] | |||

* Philipp Koehn's [http://www.statmt.org/book/slides/04-word-based-models.pdf#page=30 slides] (the slides contain the pseudo-code for IBM1) | |||

== References == | == References == | ||

<references /> | <references /> | ||

Latest revision as of 10:07, 25 March 2015

| |

| Lecture video: |

web TODO Youtube |

|---|---|

| Exercises: | IBM-1 Alignment Model |

{{#ev:youtube|https://www.youtube.com/watch?v=mqyMDLu5JPw%7C800%7Ccenter}}

In the previous lecture, we saw how to find sentences in parallel data which correspond to each other. Now we move one step further and look for words which correspond to each other in our parallel sentences.

This task is usually called word alignment. Note that once we have solved it, we get a (probabilistic) translation dictionary for free. See our sample "parallel corpus":

For example, if we look for translations of the Russian word "дом", we simply collect all its alignment links (lines leading from the word "дом") in our data and estimate translation probabilities from them. In our tiny example, we get P("house"|"дом") = 2/2 = 1.

IBM Model 1

The so-called IBM models[1] are the best-known and still the most commonly used approach to word alignment. In our lecture, we only go through the first, simplest model.

The algorithm for IBM-1 only looks at lexical translation probability. So basically, our goal is to fill in a matrix which says for each target word and source word what is the probability . Once we have this dictionary, we can get the actual alignments by finding the most probable target word for each source word (just in the case of IBM-1) and drawing a link there.

The learning algorithm is an instance of Expectation-maximization. In general, this is an iterative procedure which alternates between two steps until convergence:

- E-step: apply current model parameters to the data

- M-step: estimate new model parameters from the data

Initially, all of our translation probabilities are uniform.

In the expectation step, we apply them to our data, i.e. we draw alignment links. Assuming our current sentence contains target words, we draw a link of "weight" (because our initial probability is uniform) from each source word to each target word. And for each source word, we remember that we have seen it aligned to each of the target words in the sentence times. That is, we collect fractional counts.

Now assume that we have gone through our whole corpus. We have seen a given source word with some target words some (fractional) number of times. Now in the maximization step, we turn these fractional counts into probabilities simply by normalizing them (this is the optimal thing to do, we could formally derive that this is the maximum likelihood estimate for this model):

The words that occurred frequently with our source word will have a higher fractional count, and therefore a higher translation probability.

In the next iteration, we apply our newly learned probabilities to our data, collect the fractional counts etc. We can run this algorithm for a limited number of iterations or check for convergence (the conditional probabilities stop changing). In this simple model, we are guaranteed to find a solution which is globally optimal for our data.

Model Limitations

IBM Model 1 only looks at lexical translation probability. It has no notion of word position so it is happy to align two neighboring words in the source sentence to two completely different positions in the target. It also disregards how many alignment links lead to a particular word (it does not model the so-called word fertility), so in some cases, it can even align the whole source sentence to a single target-side word, leaving other target words unaligned. Higher IBM models address these limitations with probability estimates of distortion and fertility.

Exercises

See Also

- Adam Lopez' lecture on IBM model 1 and the accompanying slides

- Philipp Koehn's slides (the slides contain the pseudo-code for IBM1)

References

- ↑ Peter F. Brown, Stephen A. Della Pietra, Vincent J. Della Pietra, Robert L. Mercer The Mathematics of Statistical Machine Translation: Parameter Estimation